The Challenge

Many teachers lack the skills and confidence to effectively integrate computer modeling and simulation into their science classrooms. Research studies show that it takes more than a single professional development workshop to build the necessary skill and confidence. Online communities have been proposed as a means to address this problem. Teachers with GUTS (Growing Up Thinking Scientifically) offers an engaging middle school curriculum and accompanying professional development for teachers.

Through an online community of practice, Teachers with GUTS (TWiG) supports teachers in mitigating challenges, builds their expertise with additional training and resources, and answers teachers' questions as they bring computational science experiences into their classrooms. To truly teach with GUTS, teachers needed a community they could turn to.

Social media platforms and existing online discussion forums were considered, but research showed that these platforms lacked the features specifically needed. Instead, they needed a platform tailored to their needs:

- Forum for topical discussions

- Resource library to share documents, videos, and code

- Events listing page

- Practice area for participating in monthly “challenges” or “work sessions”

Our Approach

Through our discovery work we identified that a forum, resource library, practice space, and faceted search would give teachers the support, resources, and tools they need to teach the curricula with confidence. TWiG would then support the website with both drip and push marketing to promote the resources and encourage activity.

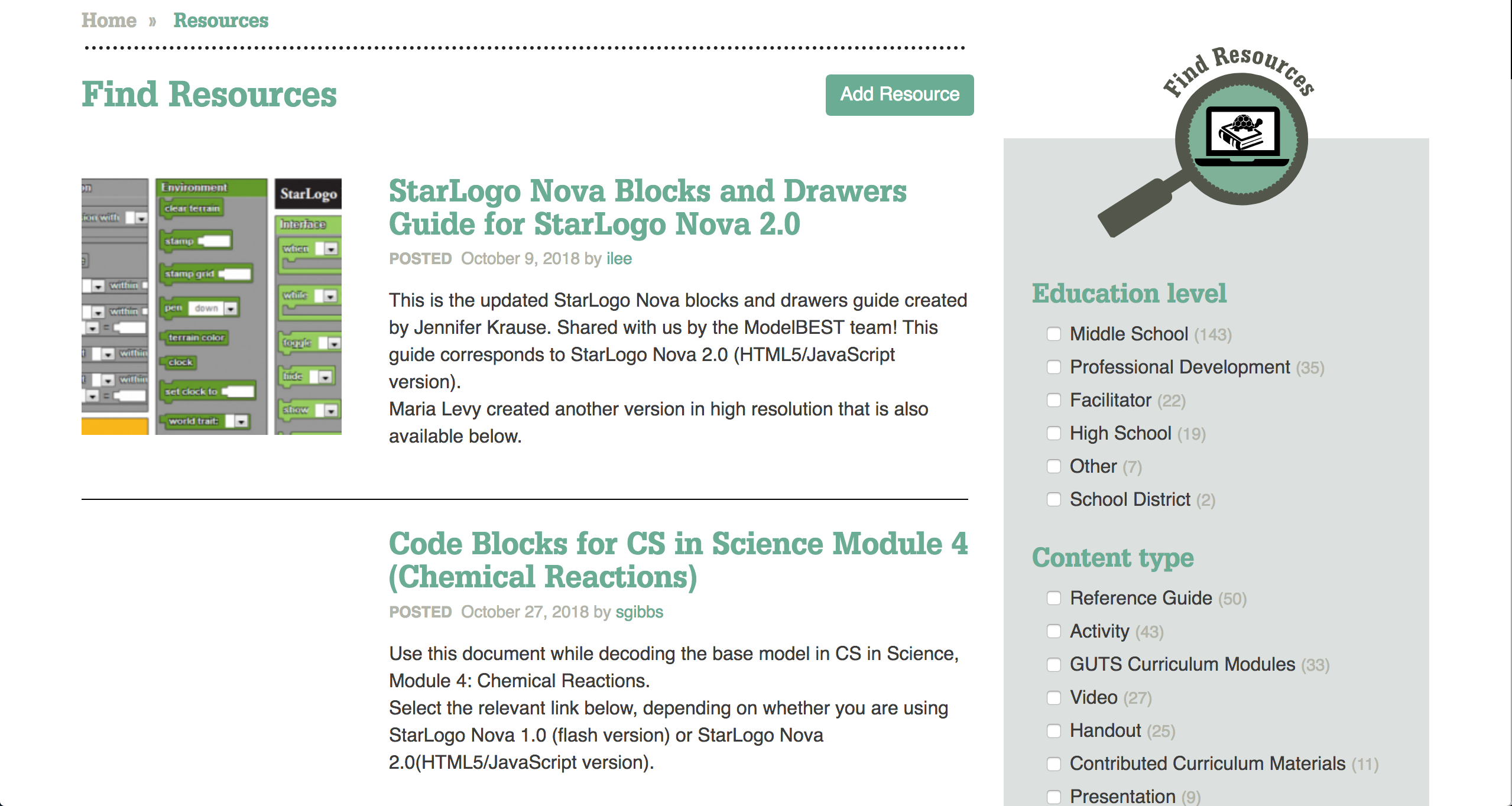

Resource Library

The Project GUTS CS in Science curriculum is the heart of TWiG’s work. It includes several learning modules as well as supporting documents such as rubrics and supplementary activities. We built a resource library that displays the most recent additions front and center, while providing a faceted search so teachers can drill down to specific resources.

We also took advantage of the collaborative spirit of the TWiG community by allowing teachers to submit their own resources. TWiG staff review each submission, revise it if needed, align it with standards, and then publish it.

Faceted search allows teachers to find resources based on several criteria.


Forum

To support online discussion and peer assistance, we built a forum. We built on Drupal Core’s Forum module and, with a custom module, extended it to include an area accessible only to facilitators.

We customized the forum further by disabling threaded comments, as that structure did not work well for the teachers using the site. However, in doing so we learned of a longstanding issue with Drupal and comments in which converting threaded comments to a flat structure risks deleting the nested comments. In response, we ported the Flat Comments module to Drupal 8.

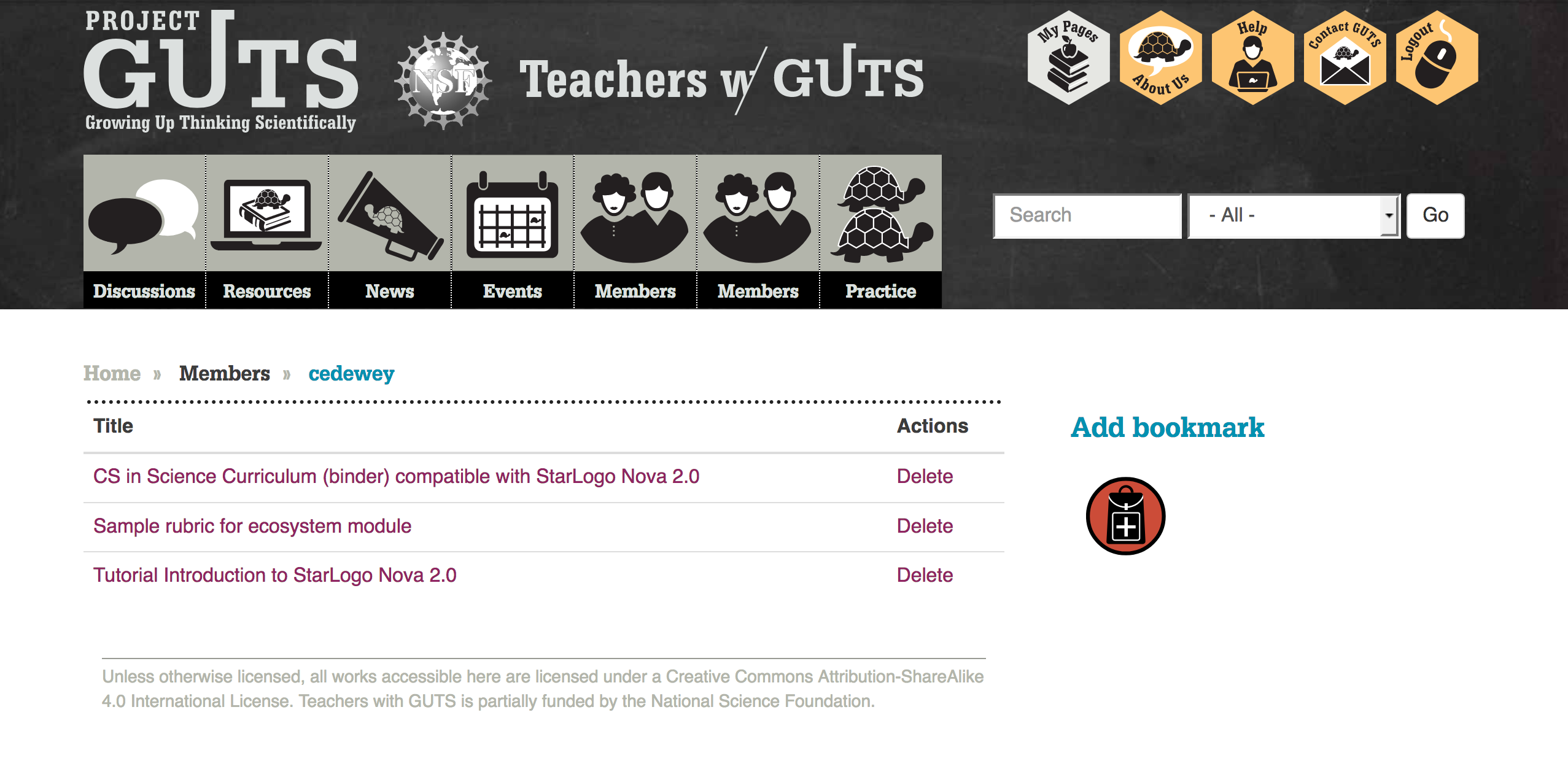

Bookmarks

Even with faceted search and thoughtful categorization, the number of resources and discussions on the site can be overwhelming. We created a Drupal 8 version of the Backpack module that allows teachers to save pages to their “backpack.”

These backpacks can either be private or shared with other members. Curating specific lists is another way teachers can share knowledge with one another.

You can download and use the Backpack module on your Drupal site at https://github.com/agaric/bookmark

Teachers can bookmark a resource or discussion for quick access in the future.


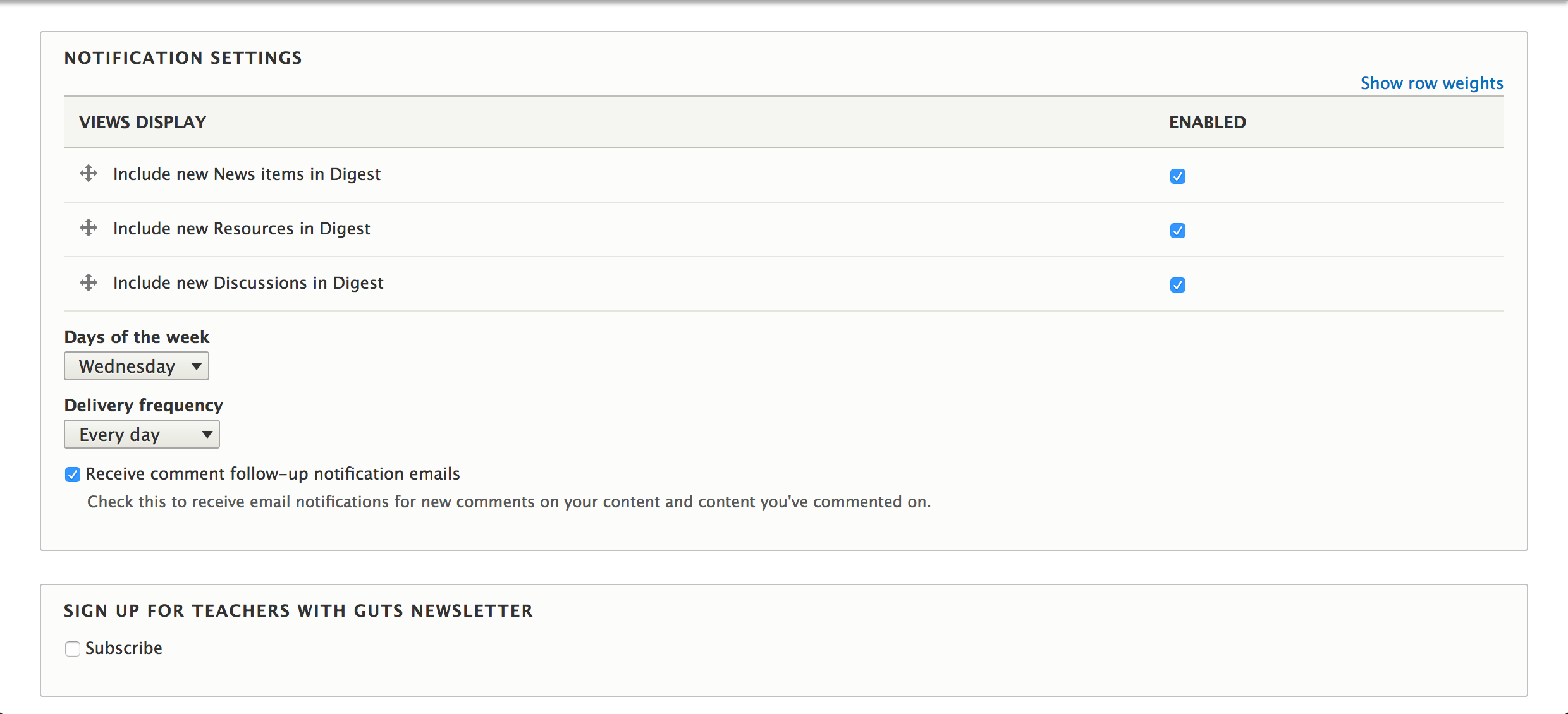

Notifications

While a custom site was more advantageous than an email list or Facebook group, the reality is that most teachers’ daily routine does not include visiting teacherswithguts.org. In order to keep teachers engaged they needed a way to know when relevant activity was taking place.

We built a notification system, configurable by each teacher, so that they could be emailed about activity pertinent to their work. Teachers can choose to receive the following notifications:

- When a new article is posted

- When a new resource is posted

- When a new discussion is posted

- When a comment is made on a discussion

Additionally, teachers choose the day to be sent emails, as well as the frequency. This was all made possible by enhancing the Personal Digest and Comment Notify modules.

We enhanced the Personal Digest and Comment Notify modules to give users fine grained control over their notifications.


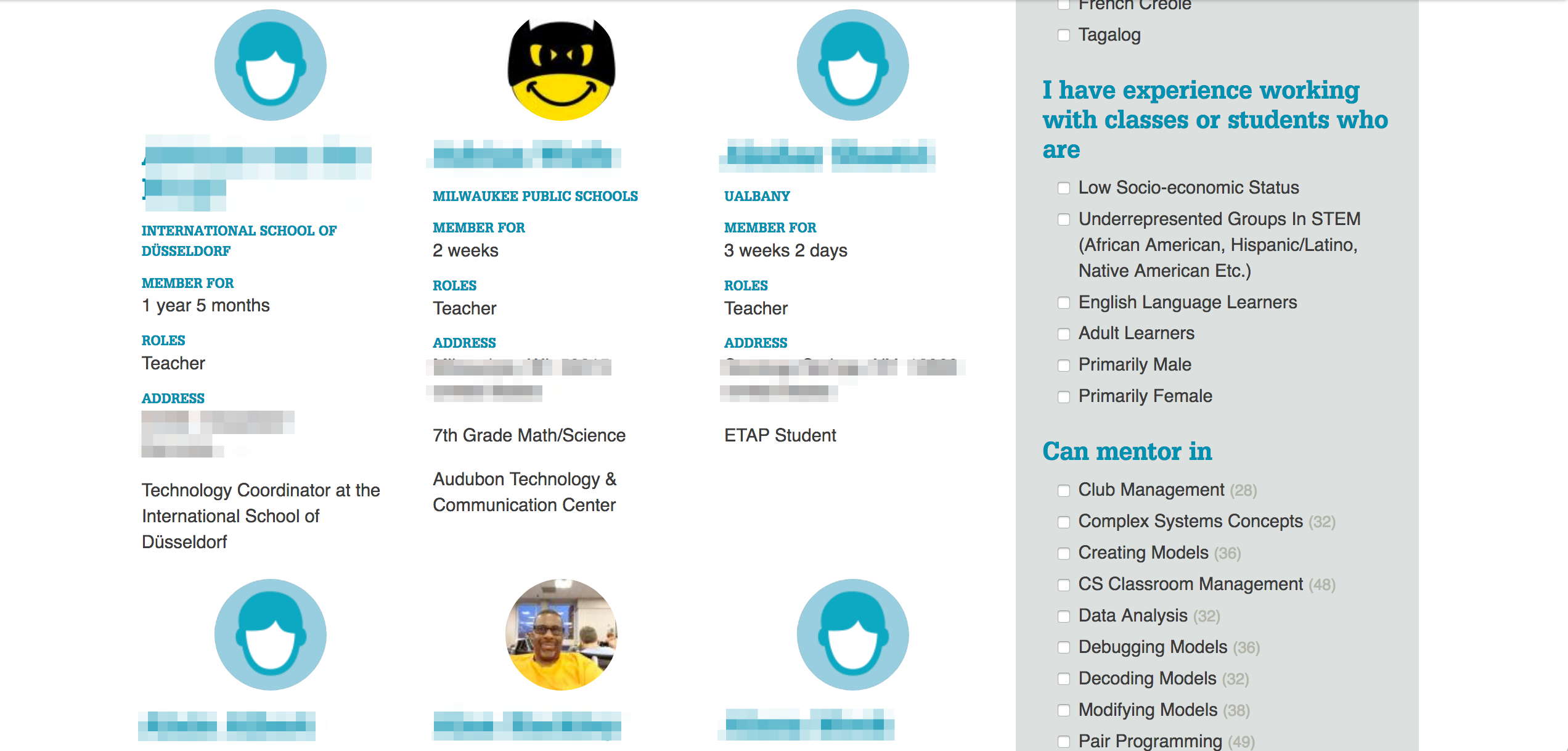

Member Directory

To build upon the networking happening in the forums, we made it easy for teachers to find one another based on shared interests, experience, and geography. Each teacher customizes their member profile with key information about themselves, their areas of expertise, and the areas in which they wish to grow. We then surfaced that information in a filterable search of members, helping teachers mentor one another.

Members can easily find others with similar interests in the member directory.


Results

With an onboarding process much improved from their previous site, plus the ability to bulk invite users, TWiG was able to sign up over 700 teachers when the site launched in 2016. We’ve continued to improve the site to increase participation and surface useful metrics for site administrators and researchers.

Former school teacher turned technologist, Clayton now applies his background in linguistics, community organizing and web development as a user experience architect for Agaric and co-founder of Drutopia.

Clayton has worked with universities, open source companies, tech diversity advocates, prison abolitionists, and others to translate their organizational goals into impactful digital tools with meaningful content.

Aside from content strategy and information architecture, Clayton also enjoys being a goofy dad and always appreciates a good paraprosdokian.

Worker-owned cooperatives are businesses owned and controlled by the people who work in them. This is a collection of resources that will help you navigate the world of cooperatives and opportunities.

This list will continue to grow and connect with cooperative networks around the globe.

Boston area:

- WORC'N, the Worker-Owned and Run Cooperative Network of Greater Boston is a network of worker-owned cooperatives, co-op developers, and those seeking support to start worker cooperatives.

- Boston Center for Community Ownership

National/international:

- US Federation of Worker Cooperatives

- Democracy at Work aims to build a social movement for a new society whose productive enterprises (offices, factories, and stores) will mostly be Worker Self-Directed Enterprises (WSDEs), a true economic democracy.

- North American Technology Worker Cooperatives

- Tech Coop How To - .pdf

Find worker cooperatives:

- Member directory of the US Federation of Worker Cooperatives

- Find.coop (open directory of all types of coops)

- ica.coop (open directory of all types of coops)

- Drupal Worker Cooperative group and overall Drupal coop group. See in particular Wiki of worker coops and resources.

Legal resources

News articles about cooperatives:

Presentations and speakers:

Cooperative Resources:

Some great events happened in 2014 based around building cooperatives and collectives. These events should have videos online of the speakers and events: California Cooperative Conference and Chicago Freedom Summer

Find It

Program Locator and Event Discovery platform

Search a curated directory of events

Filter results based on age, location, cost, activity, and schedule.

Benjamin lives and works to connect people, ideas, and resources so more awesome things happen.

A web developer well-established with Drupal and PHP, he has also been enjoying programming projects with Django and Python. His work with Agaric clients has included universities (MIT and Harvard University), corporations (Backupify and GenArts), and not-for-profit organizations (Partners In Health and National Institute for Children's Health Quality).

Benjamin tries to aid struggles for justice and liberty, or tries not to do harm in the meantime. He is an organizer of the People Who Give a Damn, recognized as an incorporated entity by the IRS and the Commonwealth of Massachusetts charities division. He has also supported several artistic and philanthropic ventures and was a founding, elected director of the Amazing Things Arts Center in Framingham, Massachusetts.

He led 34 authors in writing the 1,100 page Definitive Guide to Drupal 7, but he is probably still best known in the Drupal community for posting things he finds to data.agaric.com where developers running into the same challenges find, if not answers, comfort that they are not alone.

You can get more (too much) of Ben on a cooperatively-run part of the fediverse at @mlncn@social.coop.

Turning off links to entities that have been Rabbit Holed in modern Drupal

Show and Tell

Share what you have learned. See what others are doing.

Today we are going to learn how to migrate users into Drupal. The example code will be explained in two blog posts. In this one, we cover the migration of email, timezone, username, password, and status. In the next one, we will cover creation date, roles, and profile pictures. Several techniques will be implemented to ensure that the migrated data is valid, for example, making sure that usernames are not duplicated.

Although the example is standalone, we will build on many of the concepts that have already been covered in the series. For instance, a file migration is included to import images used as profile pictures. This topic has been explained in detail in a previous post, and the example code is pretty similar. Therefore, no explanation is provided about the file migration to keep the focus on the user migration. Feel free to read other posts in the series if you need a refresher.

Getting the code

You can get the full code example at https://github.com/dinarcon/ud_migrations. The module to enable is UD users, whose machine name is ud_migrations_users. The two migrations to execute are udm_user_pictures and udm_users. Notice that both migrations belong to the same module. Refer to this article to learn where the module should be placed.

The example assumes Drupal was installed using the standard installation profile. Particularly, we depend on a Picture (user_picture) image field attached to the user entity. The word in parentheses represents the machine name of the image field.

The explanation below is only for the user migration. It depends on a file migration to get the profile pictures. One motivation to have two migrations is for the images to be deleted if the file migration is rolled back. Note that other techniques exist for migrating images without having to create a separate migration. We have covered two of them in the articles about subfields and constants and pseudofields.

Understanding the source

It is very important to understand the format of your source data. This will guide the transformation process required to produce the expected destination format. For this example, it is assumed that the legacy system from which users are being imported did not have unique usernames. Emails were used to uniquely identify users, but that is not desired in the new Drupal site. Instead, a username will be created from a public_name source column. Special measures will be taken to prevent duplication as Drupal usernames must be unique. Two more things to consider. First, source passwords are provided in plain text (never do this!). Second, some elements might be missing in the source like roles and profile picture. The following snippet shows a sample record for the source section:

```yaml
source:
  plugin: embedded_data
  data_rows:
    - legacy_id: 101
      public_name: 'Michele'
      user_email: 'micky@example.com'
      timezone: 'America/New_York'
      user_password: 'totally insecure password 1'
      user_status: 'active'
      member_since: 'January 1, 2011'
      user_roles: 'forum moderator, forum admin'
      user_photo: 'P01'
  ids:
    legacy_id:
      type: integer
```

Configuring the destination and dependencies

The destination section specifies that user is the target entity. When that is the case, you can set an optional md5_passwords configuration. If it is set to true, the system will take an MD5-hashed password and convert it to the hashing algorithm that Drupal uses. For more information about password migrations, refer to these articles for basic and advanced use cases. To migrate the profile pictures, a separate migration is created. The dependency of user on file is added explicitly. Refer to these articles for more information on migrating images and files and setting dependencies. The following code snippet shows how the destination and dependencies are set:

```yaml
destination:
  plugin: 'entity:user'
  md5_passwords: true
migration_dependencies:
  required:
    - udm_user_pictures
  optional: []
```

Processing the fields

The interesting part of a user migration is the field mapping. The specific transformation will depend on your source, but some arguably complex cases will be addressed in the example. Let’s start with the basics: verbatim copies from source to destination. The following snippet shows three mappings:

```yaml
mail: user_email
init: user_email
timezone: user_timezone
```

The mail, init, and timezone entity properties are copied directly from the source. Both mail and init are email addresses. The difference is that mail stores the current email, while init stores the one used when the account was first created. The former might change if the user updates their profile, while the latter will never change. The timezone needs to be a string taken from a specific set of values. Refer to this page for a list of supported timezones.

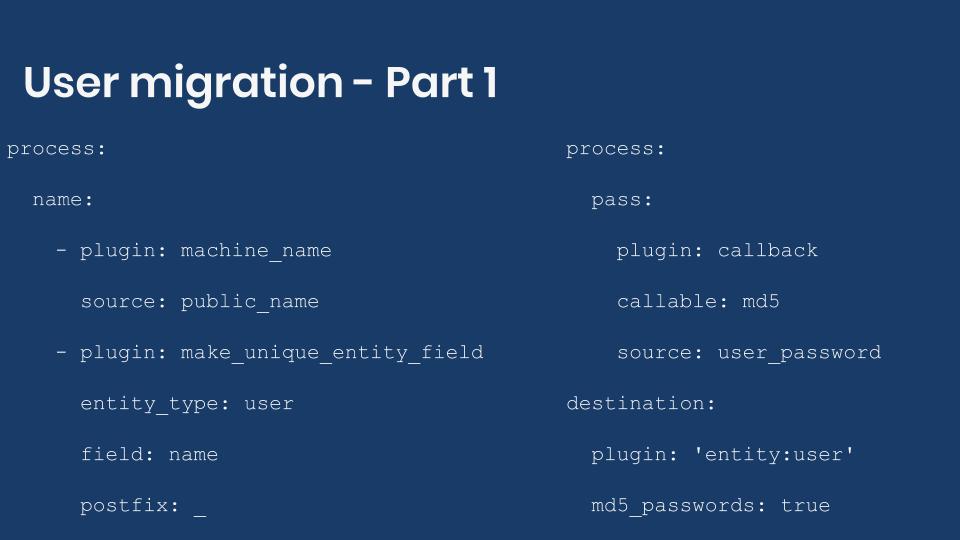

```yaml
name:
  - plugin: machine_name
    source: public_name
  - plugin: make_unique_entity_field
    entity_type: user
    field: name
    postfix: _
```

The name entity property stores the username. This has to be unique in the system. If the source data contained a suitable unique value for each record, it could be used to set the username. However, none of the unique source columns (e.g., legacy_id) is suitable to be used as a username. Therefore, extra processing is needed. The machine_name plugin converts the public_name source column into a transliterated string with some restrictions: any character that is not a number or letter will be converted to an underscore. The transformed value is sent to the make_unique_entity_field plugin. This plugin makes sure its input value is not repeated in the whole system for a particular entity field. In this example, the username will be unique. The plugin is configured by indicating which entity type and field (property) you want to check. If an equal value already exists, a new one is created by appending what you define as postfix plus a number. In this example, there are two records with public_name set to Benjamin. Eventually, the usernames produced by running the process plugins chain will be: benjamin and benjamin_1.
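To make the deduplication concrete, here is a reduced sketch of two source rows that share a public_name, annotated with the usernames the plugin chain above would produce. The legacy_id values are placeholders for illustration, and the sketch assumes no user named benjamin exists on the site before the migration runs.

```yaml
source:
  plugin: embedded_data
  data_rows:
    # The legacy_id values are hypothetical placeholders.
    - legacy_id: 1
      # machine_name yields "benjamin"; it is not taken yet, so name = benjamin
      public_name: 'Benjamin'
    - legacy_id: 2
      # machine_name yields "benjamin"; it is already taken, so name = benjamin_1
      public_name: 'Benjamin'
  ids:
    legacy_id:
      type: integer
```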

```yaml
process:
  pass:
    plugin: callback
    callable: md5
    source: user_password
destination:
  plugin: 'entity:user'
  md5_passwords: true
```

The pass entity property stores the user’s password. In this example, the source provides the passwords in plain text. Needless to say, that is a terrible idea. But let’s work with it for now. Drupal uses portable PHP password hashes implemented by PhpassHashedPassword. Understanding the details of how Drupal converts one algorithm to another will be left as an exercise for the curious reader. In this example, we are going to take advantage of a feature provided by the migrate API to automatically convert MD5 hashes to the algorithm used by Drupal. The callback plugin is configured to use the md5 PHP function to convert the plain text password into a hashed version. The last part of the puzzle is to set, in the destination section, the md5_passwords configuration to true. This will take care of converting the already MD5-hashed password to the value expected by Drupal.

Note: MD5-hashed passwords are insecure. In the example, the password is hashed with MD5 as an intermediate step only. Drupal uses other algorithms to store passwords securely.
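If the legacy system had already stored MD5 hashes instead of plain text, the callback step would not be needed. A minimal sketch of that variant follows, assuming a hypothetical source column named user_password_md5 that holds the hash:

```yaml
process:
  # Hypothetical column that already contains an MD5 hash, so it is copied as is.
  pass: user_password_md5
destination:
  plugin: 'entity:user'
  # Tells the destination that incoming passwords are MD5 hashes to be converted.
  md5_passwords: true
```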

```yaml
status:
  plugin: static_map
  source: user_status
  map:
    inactive: 0
    active: 1
```

The status entity property stores whether a user is active or blocked from the system. The source user_status values are strings, but Drupal stores this data as a boolean. A value of zero (0) indicates that the user is blocked while a value of one (1) indicates that it is active. The static_map plugin is used to manually map the values from source to destination. This plugin expects a map configuration containing an array of key-value mappings. The value from the source is on the left. The value expected by Drupal is on the right.

Technical note: Booleans are true or false values. Even though Drupal treats the status property as a boolean, it is internally stored as a tiny int in the database. That is why the numbers zero or one are used in the example. For this particular case, using a number or a boolean value on the right side of the mapping produces the same result.
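One detail worth keeping in mind: when static_map receives a value that is not listed in map and no fallback is configured, the row is skipped. The sketch below adds the plugin's default_value option, under the assumption (made here only for illustration) that users with an unlisted status should be imported as blocked:

```yaml
status:
  plugin: static_map
  source: user_status
  map:
    inactive: 0
    active: 1
  # Assumption for illustration: any unlisted status is imported as blocked.
  default_value: 0
```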

In the next blog post, we will continue with the user migration. Particularly, we will explain how to migrate the user creation time, roles, and profile pictures.

What did you learn in today’s blog post? Have you migrated user passwords before, either in plain text or hashed? Did you know how to prevent duplicates for values that need to be unique in the system? Were you aware of the plugin that allows you to manually map values from source to destination? Please share your answers in the comments. Also, I would be grateful if you shared this blog post with others.

Next: Migrating users into Drupal - Part 2

This blog post series, cross-posted at UnderstandDrupal.com as well as here on Agaric.coop, is made possible thanks to these generous sponsors. Contact Understand Drupal if your organization would like to support this documentation project, whether it is the migration series or other topics.

Websites powered by Find It connect people to all that their communities have to offer. Developed under the guidance of the Cambridge Kids' Council on a Free and Open Source License, Find It ensures that people from all backgrounds can easily find activities, services, and resources.

Find It's design was informed by hundreds of hours of research, including over 1,250 resident surveys and over 120 interviews with service providers. Six years of research, development, and continuous improvements have resulted in the Find It approach and platform, ready to be implemented in cities and communities around the world.

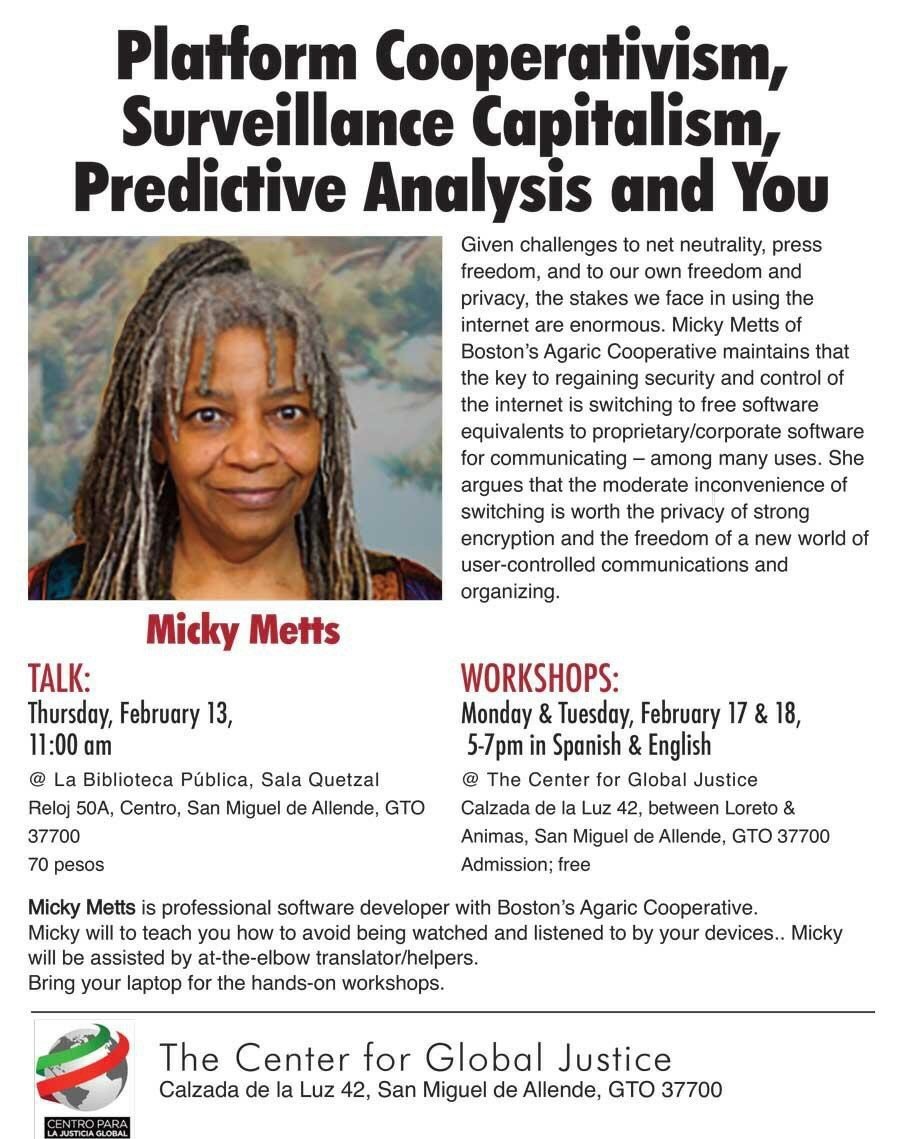

MASS, or Model of Architecture Serving Society, is a large – if not "massive" – non-profit collective of architects and designers who have done hundreds of impressive community engaged projects over 20 years of work. MASS’ approach of using design and architecture to engage communities and push for systemic social change resonates with our own approaches and helped make this collaboration close to our hearts.

Challenge

We first worked with MASS on their last major redesign in 2016–2017. We continued to provide maintenance and support for the ensuing decade, including design changes by a new art director. Continued staff turnover made us a key source of institutional knowledge about use of the site, yet it also meant that we no longer had a working relationship with design leadership. For this next major redesign, MASS leadership began work with a different, design-dominant firm that chose to develop the site themselves. We were using Drupal's powerful content export capabilities to provide the firm with data in exactly the format they needed, and waiting to be told MASS would switch away from our site, when instead we were informed that the competing firm was having difficulty both achieving functional parity with the old site (particularly ease of editing) and implementing their own design.

We took on the challenge.

Approach

Instead of building completely from scratch, we built the new site as an iteration of the previous one, allowing content to be kept in place or more easily migrated. Sticking with Drupal, we upgraded to the most current version and implemented a host of technological modernizations along the way as well (a lot of changes happen out there over an 8-year span).

On the design front, the design changes kept coming. A major portion of the effort was driven by a MASS internal team led by Bob Stone. Another outside consultant put his stamp on it. MASS leadership continued to make revisions.

Finally, Annie Yuxi Wang came in as a contractor to MASS and integrated the various design directions with her own amazing artistic sense and put the project on track to success. Annie provided us with Figma designs and mockups. (We have continued to work with Annie on subsequent client projects!)

Many constraints remained the same. Since MASS has important members and projects located well outside of the privileged world of fast internet (including rural and African projects), the decidedly image heavy site needed to be optimized for slower connections.

The main impact on our approach was continual design and functionality revisions. Symbolically, at the last minute the name of the organization changed, from MASS Design Group to (what "MASS" has always literally stood for) Model of Architecture Serving Society.

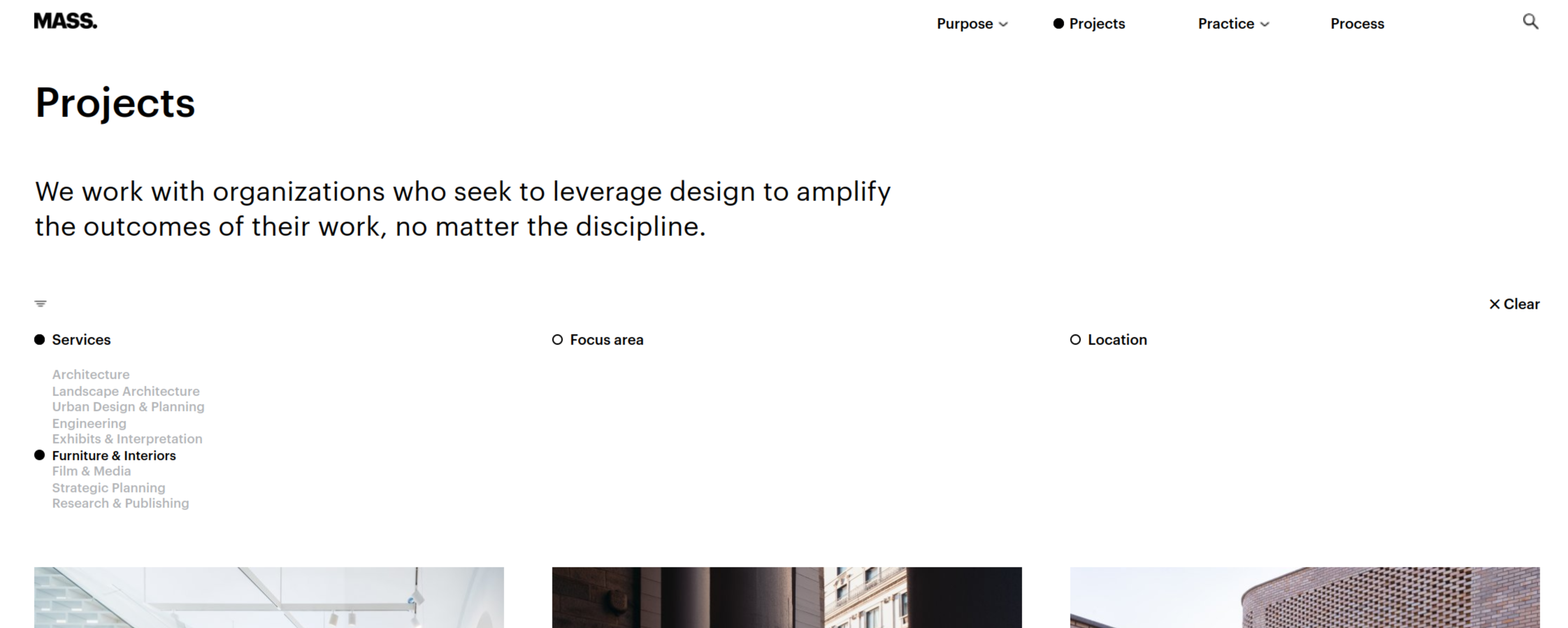

Design language

MASS designers worked closely with us, driving the redesign with great design leadership, especially from Annie. The new design language included a number of elements recurring through the site, subtly weaving a visual story:

The MASSive dot recurs through the site: small in the logo, it then expands into a navigation hint and the tab indicator used in Projects.

The MASSive dot in logo, menu indicator and marking active filters


We incorporated the dot as an element to accompany headers built into the wysiwyg styles for editors.

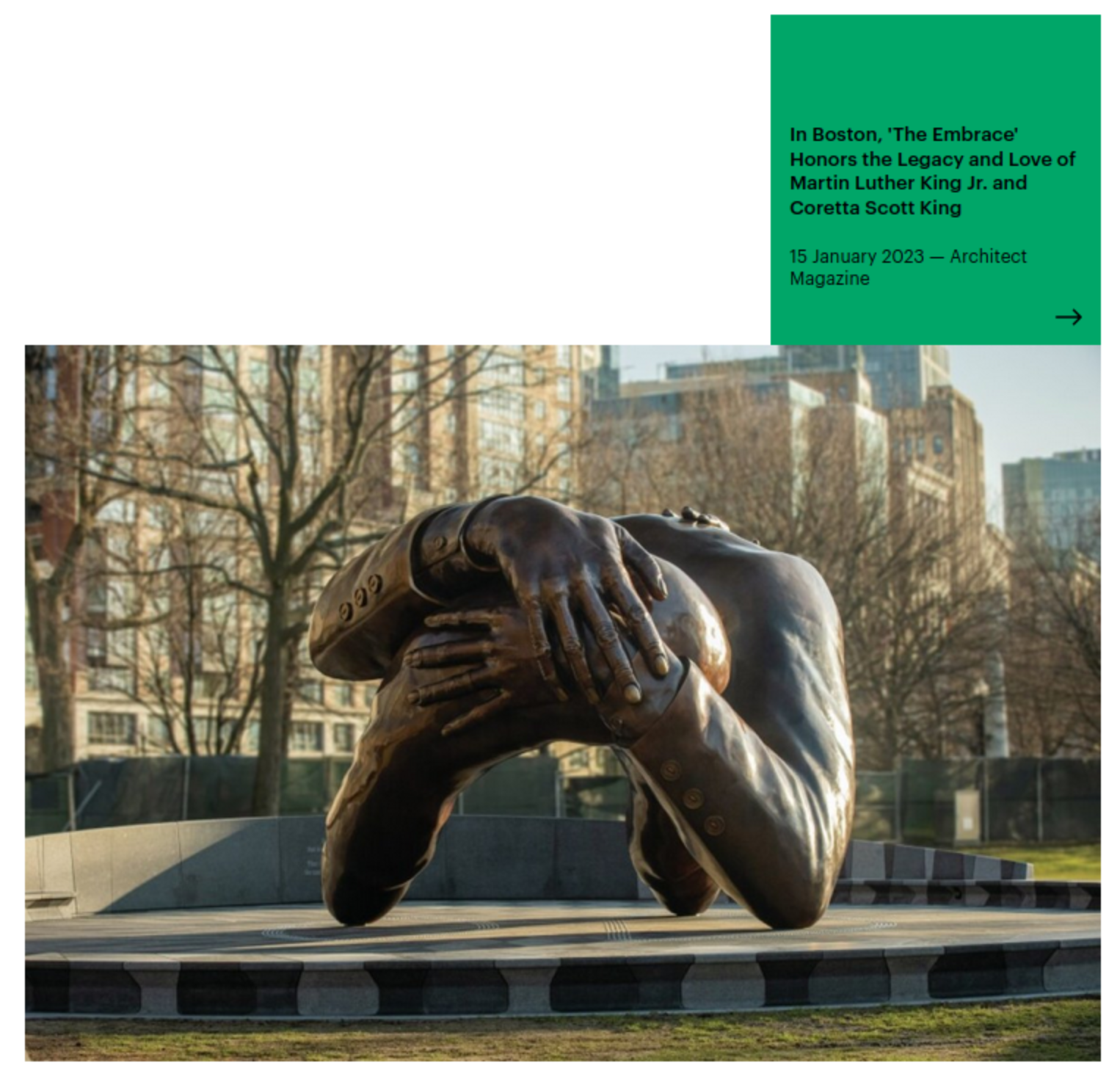

Post-its hanging on the edge of images speak to the hands-on work some of us remember from in-person collaboration; we recognize them from a collective brainstorm or the precariously attached reminder.

Post it note on display on the front page


Near the latter stretch of the project, it became clear that the new clean look could benefit from an infusion of colors for certain pages (beyond the dominant MASS green). You can see this in action on the Purpose page where a theme color for the page is chosen from a color scheme pickable in the back end and is then repeated in select paragraphs and the footer.

These were worked into the Drupal user interface for content editors to spin up for their own page design. Mostly these elements were attached to paragraphs for liberal use anywhere on the site.

Some content types of course got their own treatment, notably our Person nodes designed to appear as a full page modal sometimes including their own modals on modals.

Workflow

With a lot of cooks in the editorial kitchen, MASS needed a solid tool for handling content in different states of completion. So we wrote the Content Moderation Tabs and Workflow buttons modules to meet the need. Depending on permissions granted, the editor has access to a row of buttons for sending the node into various states including Archive or Trash (for the powerful few), to create a new draft or publish/unpublish etc. This gives content editors some ability to spend time on updating or redesigning content behind the scenes without disrupting the site (or accidentally deleting content).

Contributing back

As the site progressed over time and Drupal itself saw its own technological shifts, working on MASS allowed us to meet new incompatibilities, edge cases and new needs with our own fixes or brand new modules which are now in the Drupal ecosystem for anyone to freely use.

Some of these include:

- the Give module to accept donations and payments.

- Content Moderation Tabs

- Workflow buttons

- Entity Reference Override

- and poetically named Display One Filter Another with Client-side Hierarchical Select

Along the way, some of the new technologies we pulled in included htmx (in use ubiquitously on the site), class-based Drupal hooks, and a slew of new HTML and CSS features that broke into mainstream browsers during development, including <dialog> tags, CSS :has() selectors, and more.

Results

After a long process of design iterations, rethinks, and a good deal of feature creep, the gorgeous new website finally launched in December of 2025, a couple of days before MASS’ Abundant Futures event.

The growing community built around Drupal offers a multitude of options to easily integrate Drupal into your current lifestyle and to branch out in new and different ways. Here are a few ways to migrate your lifestyle to one as a Freelance Drupal Developer and a contributor to the community.

If you have a 9 to 5 job at an office or a specific location, you can choose to view online Drupal tutorials in whatever spare time you have instead of watching a movie. Drupal tutorials can be quite entertaining at times and are especially rewarding because they will change your life for the better.

Sign up for an account on Drupal.org and download the latest version of Drupal. Install Drupal locally on your hard drive and use some of the knowledge you learned in the tutorials. If you get stuck, log onto groups.drupal.org and search for an answer to your issue - chances are good that someone else has had the same issue and the solution will be posted there. As you search through the posts on the forum, be sure to respond to any posts that you may have the solution for. Since you have just gone through the install and setup, there is a good possibility that even as a beginner - you too may be able to help someone else. It's a good practice to do both things on a forum visit:

- Ask For Help

- Give Some Help

After a few weeks or months viewing tutorial videos and getting a solid idea of the moving parts within Drupal, you can start to create your first real site. I recommend that you involve a friend. Most people have at least one friend that has an idea for a business venture. Ask your friend if you may help them build a site based on their business idea. Enlist the friend to help you by inviting them over for a bowl of popcorn and a few Drupal movies...

A lot of people have asked us what 'agaric' means exactly, and why we chose to use it. Wikipedia describes agaric as a “type of fungal fruiting body characterized by...” blah blah blah (read the wiki)

Basically, a mushroom.

Why a mushroom you ask? Well, mushrooms are the 'fruiting bodies' of larger, sometimes vastly larger, mycelial networks. What's a mycelial network? In short, a network of mycelium, which are “the vegetative part of a fungus, consisting of a mass of branching, thread-like hyphae“ (read the wiki --> http://en.wikipedia.org/wiki/Mycelium)

Really, just read the wiki, it's cool, here's an excerpt:

A mycelium may be minute, forming a colony that is too small to see, or it may be extensive. "Is this the largest organism in the world? This 2,400-acre site in eastern Oregon had a contiguous growth of mycelium before logging roads cut through it. Estimated at 1,665 football fields in size and 2,200 years old, this one fungus has killed the forest above it several times over, and in so doing has built deeper soil layers that allow the growth of ever-larger stands of trees. Mushroom-forming forest fungi are unique in that their mycelial mats can achieve such massive proportions."

So there are these vast networks of interconnected life and all we really know of their existence without really close inspection is the fruiting bodies, the mushrooms or agarics. These networks are vital to ecosystems, they play an important role in the circle of nature and life, and in almost every case their presence can be proven to be beneficial in the long run.

We all know that the internet is a huge network of computers which covers a lot of the planet. The internet is also pretty much vital to our way of life these days as well, with emails and it being the information age and such... Here at Agaric Design, we see the phenomenon of the internet as an 'organism' of sorts, just like a mycelial network but on a planetary scale. The 'fruits' that this network produces are the websites out there that enhance our daily lives in some way. The ones that inform, connect, and produce positive change in the world.

With this vision in mind, we crack open our laptops every day and do what we do, constantly learning more about this interweb thingy. We think that there's more to it than miles of wires and megawatts of pulsating electricity; it is more than just a sum of its parts. We think that the real potential of the internet still remains untapped...

We think we can help it fruit.

Today we will learn how to migrate content from a JSON file into Drupal using the Migrate Plus module. We will show how to configure the migration to read files from the local file system and remote locations. The example includes node, images, and paragraphs migrations. Let’s get started.

Note: Migrate Plus has many more features. For example, it contains source plugins to import from XML files and SOAP endpoints. It provides many useful process plugins for DOM manipulation, string replacement, transliteration, etc. The module also lets you define migration plugins as configurations and create groups to share settings. It offers a custom event to modify the source data before processing begins. In today’s blog post, we are focusing on importing JSON files. Other features will be covered in future entries.

Getting the code

You can get the full code example at https://github.com/dinarcon/ud_migrations. The module to enable is UD JSON source migration, whose machine name is ud_migrations_json_source. It comes with four migrations: udm_json_source_paragraph, udm_json_source_image, udm_json_source_node_local, and udm_json_source_node_remote.

You can get the Migrate Plus module using composer: composer require 'drupal/migrate_plus:^5.0'. This will install the 8.x-5.x branch where new development will happen. This branch was created to introduce breaking changes in preparation for Drupal 9. As of this writing, the 8.x-4.x branch has feature parity with the newer branch. If your Drupal site is not composer-based, you can download the module manually.

Understanding the example set up

This migration will reuse the same configuration from the introduction to paragraph migrations example. Refer to that article for details on the configuration: the destinations will be the same content type, paragraph type, and fields. The source will be changed in today's example, as we use it to explain JSON migrations. The end result will again be nodes containing an image and a paragraph with information about someone’s favorite book. The major difference is that we are going to read from JSON. In fact, three of the migrations will read from the same file. The following snippet shows a reduced version of the file to get a sense of its structure:

```json
{
  "data": {
    "udm_people": [
      {
        "unique_id": 1,
        "name": "Michele Metts",
        "photo_file": "P01",
        "book_ref": "B10"
      },
      {...},
      {...}
    ],
    "udm_book_paragraph": [
      {
        "book_id": "B10",
        "book_details": {
          "title": "The definite guide to Drupal 7",
          "author": "Benjamin Melançon et al."
        }
      },
      {...},
      {...}
    ],
    "udm_photos": [
      {
        "photo_id": "P01",
        "photo_url": "https://agaric.coop/sites/default/files/pictures/picture-15-1421176712.jpg",
        "photo_dimensions": [240, 351]
      },
      {...},
      {...}
    ]
  }
}
```

Note: You can literally swap migration sources without changing any other part of the migration. This is a powerful feature of ETL frameworks like Drupal’s Migrate API. Although possible, the example includes slight changes to demonstrate various plugin configuration options. Also, some machine names had to be changed to avoid conflicts with other examples in the demo repository.

Migrating nodes from a JSON file

In any migration project, understanding the source is very important. For JSON migrations, there are two major considerations. First, where in the file hierarchy lies the data that you want to import. It can be at the root of the file or several levels deep in the hierarchy. Second, when you get to the array of records that you want to import, what fields are going to be made available to the migration. It is possible that each record contains more data than needed. For improved performance, it is recommended to manually include only the fields that will be required for the migration. The following code snippet shows part of the local JSON file relevant to the node migration:

    {
      "data": {
        "udm_people": [
          {
            "unique_id": 1,
            "name": "Michele Metts",
            "photo_file": "P01",
            "book_ref": "B10"
          },
          {...},
          {...}
        ]
      }
    }

The array of records containing node data lies two levels deep in the hierarchy: you start with data at the root and then descend one level to udm_people. Each element of this array is an object with four properties:

- unique_id is the unique identifier for each record within the data/udm_people hierarchy.

- name is the name of a person. This will be used in the node title.

- photo_file is the unique identifier of an image that was created in a separate migration.

- book_ref is the unique identifier of a book paragraph that was created in a separate migration.

The following snippet shows the configuration to read a local JSON file for the node migration:

    source:
      plugin: url
      data_fetcher_plugin: file
      data_parser_plugin: json
      urls:
        - modules/custom/ud_migrations/ud_migrations_json_source/sources/udm_data.json
      item_selector: data/udm_people
      fields:
        - name: src_unique_id
          label: 'Unique ID'
          selector: unique_id
        - name: src_name
          label: 'Name'
          selector: name
        - name: src_photo_file
          label: 'Photo ID'
          selector: photo_file
        - name: src_book_ref
          label: 'Book paragraph ID'
          selector: book_ref
      ids:
        src_unique_id:
          type: integer

The name of the plugin is url. Because we are reading a local file, the data_fetcher_plugin is set to file and the data_parser_plugin to json. The urls configuration contains an array of file paths relative to the Drupal root. In the example, we are reading from one file only, but you can read from multiple files at once. In that case, it is important that they have a homogeneous structure. The settings that follow will apply equally to all the files listed in urls.
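As a minimal sketch of reading several files at once, the urls list could look like the following. The module path and both file names are hypothetical and are not part of the demo repository. The point is only that every listed file would need a data/udm_people array whose records expose the same properties. Because records are tracked by the configured ids, the identifiers would presumably also need to be unique across files.

```yaml
source:
  plugin: url
  data_fetcher_plugin: file
  data_parser_plugin: json
  urls:
    # Hypothetical files, used only for illustration.
    - modules/custom/my_module/json_files/people_part_1.json
    - modules/custom/my_module/json_files/people_part_2.json
  # The settings below apply to every file listed in urls.
  item_selector: data/udm_people
  fields:
    - name: src_unique_id
      label: 'Unique ID'
      selector: unique_id
    - name: src_name
      label: 'Name'
      selector: name
  ids:
    src_unique_id:
      type: integer
```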

The item_selector configuration indicates where in the JSON file lies the array of records to be migrated. Its value is an XPath-like string used to traverse the file hierarchy. In this case, the value is data/udm_people. Note that you separate each level in the hierarchy with a slash (/).

fields has to be set to an array. Each element represents a field that will be made available to the migration. The following options can be set:

- name is required. This is how the field is going to be referenced in the migration. The name itself can be arbitrary. If it contains spaces, you need to put double quotation marks (") around it when referring to it in the migration.

- label is optional. This is a description used when presenting details about the migration, for example, in the user interface provided by the Migrate Tools module. When defined, you do not use the label to refer to the field. Keep using the name.

- selector is required. This is another XPath-like string to find the field to import. The value must be relative to the location specified by the item_selector configuration. In the example, the fields are direct children of the records to migrate. Therefore, only the property name is specified (e.g., unique_id). If you had nested objects or arrays, you would use a slash (/) character to go deeper in the hierarchy. This will be demonstrated in the image and paragraph migrations.

Finally, you specify an ids array of field names that uniquely identify each record. As already stated, the unique_id property serves that purpose. It is referenced here through its field name, src_unique_id. The following snippet shows part of the process, destination, and dependencies configuration of the node migration:

    process:
      field_ud_image/target_id:
        plugin: migration_lookup
        migration: udm_json_source_image
        source: src_photo_file
    destination:
      plugin: 'entity:node'
      default_bundle: ud_paragraphs
    migration_dependencies:
      required:
        - udm_json_source_image
        - udm_json_source_paragraph
      optional: []

The source for setting the image reference is src_photo_file. Again, this is the name of the field, not the label or the selector. The configuration of the migration lookup plugin and the dependencies point to two JSON migrations that come with this example. One is for migrating images and the other for migrating paragraphs.

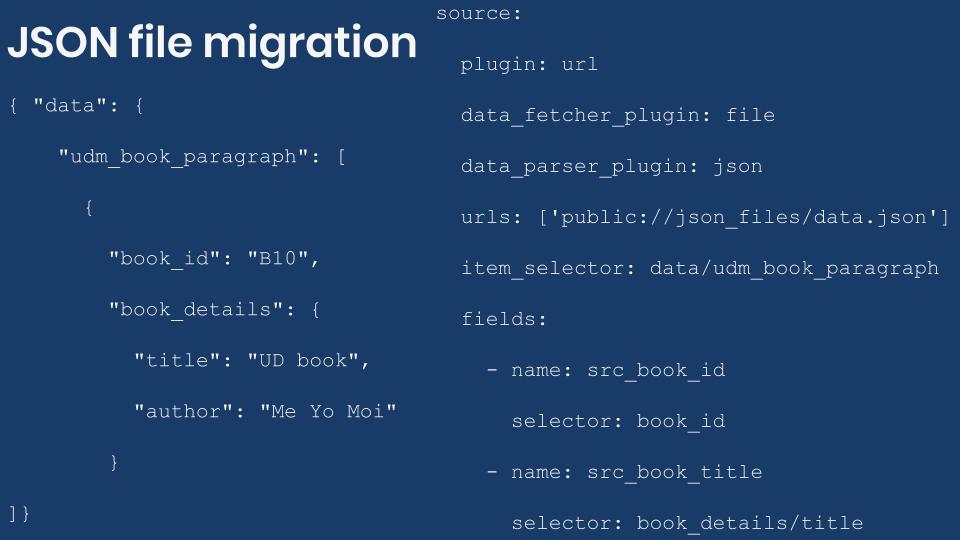

Migrating paragraphs from a JSON file

Let’s consider an example where the records to migrate have many levels of nesting. The following snippets show part of the local JSON file and source plugin configuration for the paragraph migration:

    {
      "data": {
        "udm_book_paragraph": [
          {
            "book_id": "B10",
            "book_details": {
              "title": "The definite guide to Drupal 7",
              "author": "Benjamin Melançon et al."
            }
          },
          {...},
          {...}
        ]
      }
    }

    source:
      plugin: url
      data_fetcher_plugin: file
      data_parser_plugin: json
      urls:
        - modules/custom/ud_migrations/ud_migrations_json_source/sources/udm_data.json
      item_selector: data/udm_book_paragraph
      fields:
        - name: src_book_id
          label: 'Book ID'
          selector: book_id
        - name: src_book_title
          label: 'Title'
          selector: book_details/title
        - name: src_book_author
          label: 'Author'
          selector: book_details/author
      ids:
        src_book_id:
          type: string

The plugin, data_fetcher_plugin, data_parser_plugin and urls configurations have the same values as in the node migration. The item_selector and ids configurations are slightly different to represent the path to paragraph records and the unique identifier field, respectively.

The interesting part is the value of the fields configuration. Taking data/udm_book_paragraph as a starting point, the records with paragraph data have a nested structure. Notice that book_details is an object with two properties: title and author. To refer to them, the selectors are book_details/title and book_details/author, respectively. Note that you can go as many levels deep in the hierarchy as needed to find the value that should be assigned to the field. Every level in the hierarchy is separated by a slash (/).

In this example, the target is a single paragraph type. But a similar technique can be used to migrate multiple types. One way to configure the JSON file is to have two properties. paragraph_id would contain the unique identifier for the record. paragraph_data would be an object with a property to set the paragraph type. This would also have an arbitrary number of extra properties with the data to be migrated. In the process section, you would iterate over the records to map the paragraph fields.
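To make the idea of multiple paragraph types more concrete, here is a rough sketch of what the source configuration might look like. Everything specific in it is an assumption made for illustration: the file path, the data/paragraphs location, and the type property inside paragraph_data are hypothetical and do not exist in the demo module. Only the paragraph_id and paragraph_data property names come from the description above.

```yaml
source:
  plugin: url
  data_fetcher_plugin: file
  data_parser_plugin: json
  urls:
    # Hypothetical file, not part of the demo repository.
    - modules/custom/my_module/json_files/paragraphs.json
  # Hypothetical location of the array of paragraph records.
  item_selector: data/paragraphs
  fields:
    - name: src_paragraph_id
      label: 'Paragraph ID'
      selector: paragraph_id
    # Assumes paragraph_data has a property named type holding the paragraph type.
    - name: src_paragraph_type
      label: 'Paragraph type'
      selector: paragraph_data/type
  ids:
    src_paragraph_id:
      type: string
```

Any extra properties inside paragraph_data would be exposed the same way, each with its own nested selector, before being mapped in the process section.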

The following snippet shows part of the process configuration of the paragraph migration:

    process:
      field_ud_book_paragraph_title: src_book_title
      field_ud_book_paragraph_author: src_book_author

Migrating images from a JSON file

Let’s consider an example where the records to migrate have more data than needed. The following snippets show part of the local JSON file and source plugin configuration for the image migration:

    {
      "data": {
        "udm_photos": [
          {
            "photo_id": "P01",
            "photo_url": "https://agaric.coop/sites/default/files/pictures/picture-15-1421176712.jpg",
            "photo_dimensions": [240, 351]
          },
          {...},
          {...}
        ]
      }
    }

    source:
      plugin: url
      data_fetcher_plugin: file
      data_parser_plugin: json
      urls:
        - modules/custom/ud_migrations/ud_migrations_json_source/sources/udm_data.json
      item_selector: data/udm_photos
      fields:
        - name: src_photo_id
          label: 'Photo ID'
          selector: photo_id
        - name: src_photo_url
          label: 'Photo URL'
          selector: photo_url
      ids:
        src_photo_id:
          type: string

The plugin, data_fetcher_plugin, data_parser_plugin and urls configurations have the same values as in the node migration. The item_selector and ids configurations are slightly different to represent the path to image records and the unique identifier field, respectively.

The interesting part is the value of the fields configuration. Taking data/udm_photos as a starting point, the records with image data have extra properties that are not used in the migration. Particularly, the photo_dimensions property contains an array with two values representing the width and height of the image, respectively. To ignore this property, you simply omit it from the fields configuration. In case you wanted to use it, the selectors would be photo_dimensions/0 for the width and photo_dimensions/1 for the height. Note that you use a zero-based numerical index to get the values out of arrays. Like with objects, a slash (/) is used to separate each level in the hierarchy. You can go as far as necessary in the hierarchy.
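If you did want the dimensions, the fields configuration might be extended along these lines. This is only a sketch: the src_photo_width and src_photo_height field names are hypothetical and are not part of the demo migration, while the selectors are the ones described above.

```yaml
source:
  plugin: url
  data_fetcher_plugin: file
  data_parser_plugin: json
  urls:
    - modules/custom/ud_migrations/ud_migrations_json_source/sources/udm_data.json
  item_selector: data/udm_photos
  fields:
    - name: src_photo_id
      label: 'Photo ID'
      selector: photo_id
    - name: src_photo_url
      label: 'Photo URL'
      selector: photo_url
    # Hypothetical extra fields; array elements use a zero-based index.
    - name: src_photo_width
      label: 'Photo width'
      selector: photo_dimensions/0
    - name: src_photo_height
      label: 'Photo height'
      selector: photo_dimensions/1
  ids:
    src_photo_id:
      type: string
```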

The following snippet shows part of the process configuration of the image migration:

    process:
      psf_destination_filename:
        plugin: callback
        callable: basename
        source: src_photo_url

JSON file location

When using the file data fetcher plugin, you have three options to indicate the location of the JSON files in the urls configuration:

- Use a relative path from the Drupal root. The path should not start with a slash (/). This is the approach used in this demo. For example, modules/custom/my_module/json_files/example.json.

- Use an absolute path pointing to the JSON file location in the file system. The path should start with a slash (/). For example, /var/www/drupal/modules/custom/my_module/json_files/example.json.

- Use a stream wrapper.

Being able to use stream wrappers gives you many more options. For instance:

- Files located in the public, private, and temporary file systems managed by Drupal. This leverages functionality already available in Drupal core. For example: public://json_files/example.json.

- Files located in profiles, modules, and themes. You can use the System stream wrapper module or apply this core patch to get this functionality. For example, module://my_module/json_files/example.json.

- Files located in remote servers including RSS feeds. You can use the Remote stream wrapper module to get this functionality. For example, https://understanddrupal.com/json-files/example.json.
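As a quick sketch, the location styles described above would appear in the urls configuration as follows. The paths are the illustrative ones from the lists, not files that ship with the demo module, and the alternatives are left commented out so that only one file is read.

```yaml
source:
  plugin: url
  data_fetcher_plugin: file
  data_parser_plugin: json
  urls:
    # Relative to the Drupal root (no leading slash):
    # - modules/custom/my_module/json_files/example.json
    # Absolute path in the file system (leading slash):
    # - /var/www/drupal/modules/custom/my_module/json_files/example.json
    # Stream wrapper for the public file system managed by Drupal:
    - public://json_files/example.json
  item_selector: data/udm_people
```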

Migrating remote JSON files

Migrate Plus provides another data fetcher plugin named http. You can use it to fetch files using the http and https protocols. Under the hood, it uses the Guzzle HTTP Client library. In a future blog post we will explain this data fetcher in more detail. For now, the udm_json_source_node_remote migration demonstrates a basic setup for this plugin. Note that only the data_fetcher_plugin and urls configurations are different from the local file example. The following snippet shows part of the configuration to read a remote JSON file for the node migration:

    source:
      plugin: url
      data_fetcher_plugin: http
      data_parser_plugin: json
      urls:
        - https://api.myjson.com/bins/110rcr
      item_selector: data/udm_people
      fields: ...
      ids: ...

And that is how you can use JSON files as the source of your migrations. Many more configurations are possible. For example, you can provide authentication information to get access to protected resources. You can also set custom HTTP headers. Examples will be presented in a future entry.

What did you learn in today’s blog post? Have you migrated from JSON files before? If so, what challenges have you found? Did you know that you can read local and remote files? Please share your answers in the comments. Also, I would be grateful if you shared this blog post with others.

Next: Migrating XML files into Drupal

This blog post series, cross-posted at UnderstandDrupal.com as well as here on Agaric.coop, is made possible thanks to these generous sponsors: Drupalize.me by Osio Labs has online tutorials about migrations, among other topics, and Agaric provides migration trainings, among other services. Contact Understand Drupal if your organization would like to support this documentation project, whether it is the migration series or other topics.