When creating an About page, think about your audience: anticipate the questions they have about your organization, and decide what you want them to know about you.

Drutopia

Drutopia is a platform cooperative: technology owned and supported by its users. Currently we are focusing on two Drupal distributions for grassroots organizations: the one you are using right now, Drutopia Base, and Solidarity, a distribution for member-based organizations.

Drutopia Base

This distribution comes with features such as an accessible and responsive theme, popular content types, and onsite fundraising. You can see a full list of features on the Modules administrative page.

We're also constantly improving Drutopia Base. You can see what features and improvements we're working on on our Roadmap page.

For questions on using the distribution, you can

- consult the Help pages

- ask a question in Drutopia Chat

- file a support ticket in the Drutopia GitLab Project

- email us at info@drutopia.org

Become a Member

Drutopia is democratic! Support the project and shape its future by becoming a member. Visit drutopia.org for more information.

Agaric is also offering full-day trainings for these topics later this month. Dates, prices, more details, and registration options:

MASS Design Group

Researching, building, and advocating for architecture that promotes justice and human dignity.

Links of Interest:

Lethal Autonomous Weapons Pledge - Future of Life Institute

Google's Code Of Ethics For AI Bans Its Use In Weaponry

Establishing an AI code of ethics will be harder than people think - MIT Technology Review

Elon Musk Trolls Zuckerberg: "You Don't Understand How A.I. Works"

We Won't Build Killer AI Weapons: Elon Musk And Deep Mind Founders Take Oath

Google Employees Resign in Protest Against Pentagon Contract

Microsoft's Ethical Reckoning Is Here | WIRED

Microsoft Calls For Federal Regulation of Facial Recognition | WIRED

Technical standard - Wikipedia (https://en.wikipedia.org/wiki/Technical_standard)

OpenCog - Wikipedia

Ethics of artificial intelligence - Wikipedia

As an example of the personality attributes one can already assess using Watson AI and Twitter feeds, there is a free course:

Get updates from Micky, Ben, and other Agarics on their involvement in lots of movement work. We will send you occasional dispatches from our perspective on various overlapping movements for freedom and justice, and the building of democracy thereby, as workers fighting the good fight and as passionate observers.

350.org is a global climate justice organization that helped organize the largest climate strike in history. Together, we improved their Climate Justice Action Map (CJAM) in the lead-up to the strike so that activists could better mobilize their communities.

Teachers with GUTS

Empowering educators to teach science confidently.

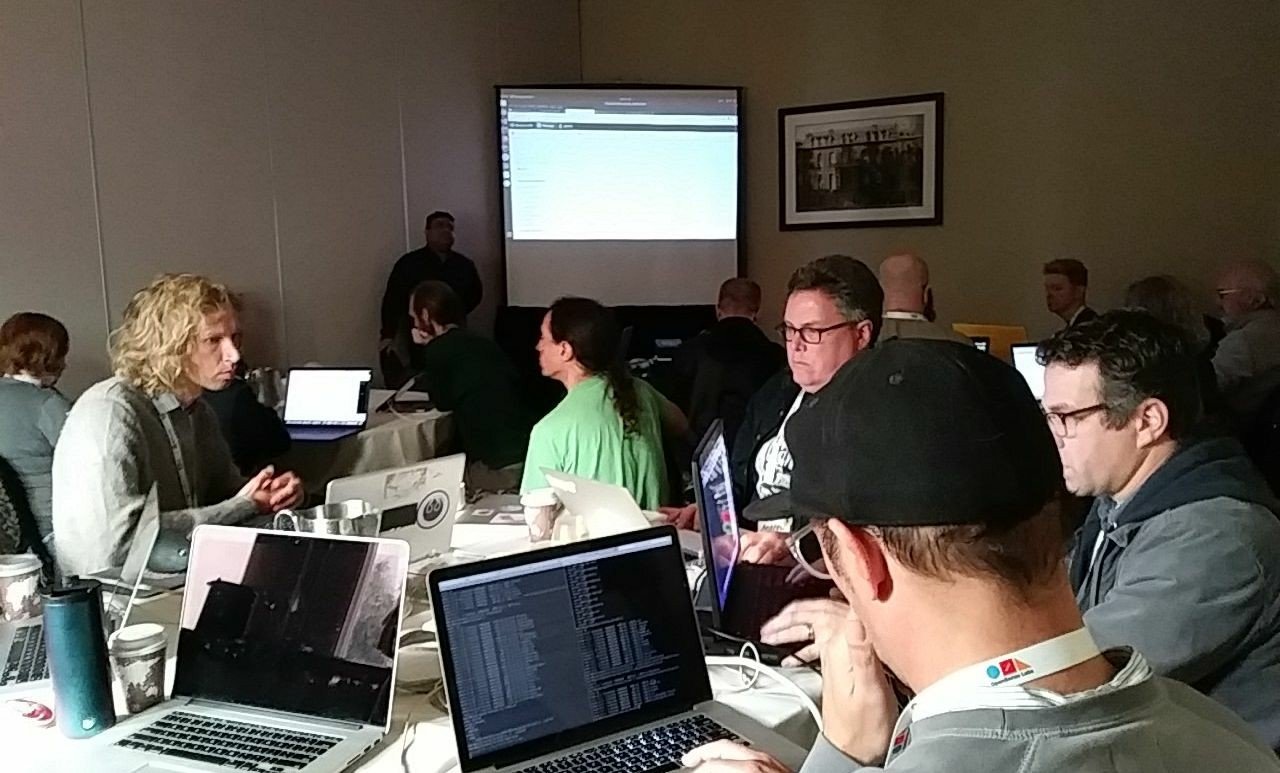

UPDATE: The DrupalCon Seattle training SOLD OUT a few days after we posted the details on the DrupalCon Seattle 2019 website! There were 45 trainees and six trainers: the Agaric team was joined by Leslie Glynn, the recipient of the 2019 Aaron Winborn Award, presented in the DriesNote! Leslie has been a volunteer at just about every Drupal event we have attended, and she is well versed in many aspects of Drupal. It was a real pleasure to have her help on the mission to get people's hands and minds on the Drupal 8 migration process.


TL;DR: Drupal 7 has a much longer lifespan than its (already pushed back) official end-of-life date, and Drupal 8 has an essentially infinite lifespan because it updates in place to Drupal 9 easily (and the same will be true of Drupal 10, 11, ∞). There's no reason to rush an upgrade, but there's no reason to wait either.

That's the short version.

A client recently wrote to Agaric about Drupal 7 / Drupal 8 / Drupal 9 project planning questions:

With the EOL for Drupal 7 in Nov of 2022, and the EOL for Drupal 8 in Nov 2021, is there a reason we should move a D7 site to D8 at all this year? Seems like we might want to move directly to D9? We don’t want to feel pushed up against a wall with a “new” site build in Drupal 8, if we can limp along in D7 for a couple more years while we develop a D9 site with a longer lifespan. I’m wondering if you might have time to discuss pros and cons briefly so we can get a good plan together for moving forward.

I started typing and almost did not stop:


No one believes me when I say this, but I repeat my assurance that Drupal 7 will be well-supported commercially until 2030 or later. Drupal 6, released in 2008, still has semi-official long-term support until at least February 24th, 2022, and Drupal 7 has a larger install base than Drupal 6 ever did. In fact, Drupal 7 currently has the largest install base of any version of Drupal by far, with more than half a million tracked installs.

Drupal 7 will be supported by the community for a long time. You do not have to feel pushed to a new version, like, ever.

Migration experts

We are acknowledged experts in Drupal migrations, leading paid trainings at Drupal conferences and beyond. Mauricio Dinarte's 31 Days of Drupal Migrations blog series is evidence of our passion for migrations, and we hope to keep living out that passion by helping you migrate your site to modern Drupal!

A full range of services

Beyond migrating your data, we offer services for site-building, theming, design, and strategy analysis: everything required to ensure a successful migration and future for your new Drupal site.

Get in touch

E-mail us at ask@agaric.coop, call us at +1 508 283 3557, or use the form below, and one of the worker-owners at Agaric will get back to you.


Drupal 7's end of life is November 2021. While you can still get mileage from your site, and there will be community long-term support for Drupal 7, there are many features in Drupal 8 (and soon Drupal 9) that your organization's site will benefit from. Also, getting everything lined up for an upgrade takes time, so this is the year many organizations should be putting their plans into motion.

At Agaric, we've made upgrades and migrations our main focus in our work to help people leverage the open web to meet their goals. Last year we led four migration trainings and presentations, including a sold-out training at DrupalCon. Mauricio even blogged every day in August to share his expertise on Drupal migrations. This year we're hitting the road to help as many people as possible upgrade to Drupal 8.

Upcoming Trainings

- January 30 DrupalCamp New Jersey - Drupal 8 Upgrade All Day Training

- February 5 NYC Drupal Meetup - Drupal 8 Migrations by Example Presentation

- March 18 MidCamp - Drupal 8 Upgrade All Day Training

- May 18 DrupalCon Minneapolis - Drupal 8 Content Migrations All Day Training

- May 19 DrupalCon Minneapolis - Upgrading to Drupal 8 Using the Migrate API All Day Training

We also plan to propose sessions and trainings at DrupalCamp Spain, DrupalCamp Iceland, Drupal GovCon, DrupalCamp Colorado, DrupalCon Barcelona, BADCamp, and some other DrupalCamps.

Last year, many of our events sold out, so register ahead of time to take advantage of early bird rates and ensure you have a spot.

We're also available for paid consulting and migration work. If you're interested, we would love to hear from you.

Resources

Not everyone can attend a training. We've tried as much as possible to also share our knowledge through blog posts and tutorials. Others in the Drupal community have also contributed documentation to make the upgrade/migration process easier.

- https://agaric.coop/migrations - clearinghouse of upcoming trainings, links, and other resources

- https://agaric.coop/31-days-drupal-migrations - step-by-step tutorials on migrations

- https://www.drupal.org/docs/8/upgrade - official Drupal documentation on upgrading to Drupal 8

An upgrade or migration can seem daunting, but we're committed, along with many other Drupal community members, to support one another in making everyone's upgrades as smooth as possible.