In Drupal 7 it was useful to do things like this:

function mymodule_content() {
  $links[] = l('Google', 'http://www.google.com');
  $links[] = l('Yahoo', 'http://www.yahoo.com');
  return t('Links: !types', array('!types' => implode(', ', $links)));
}

In this case, the exclamation mark placeholder inserts $links into the string without escaping it. Unfortunately, Drupal 8 does not support this placeholder type in FormattableMarkup::placeholderFormat(). The good news is that there is still a way to accomplish the same thing.

Today we will learn how to migrate addresses into Drupal. We are going to use the field provided by the Address module, which depends on the third-party library commerceguys/addressing. When migrating addresses, you need to be careful with the data that Drupal expects. The address components can change per country, and so can the way those components are stored. These and other important considerations will be explained. Let’s get started.

Getting the code

You can get the full code example at https://github.com/dinarcon/ud_migrations. The module to enable is UD address, whose machine name is ud_migrations_address. The migration to execute is udm_address. Notice that this migration writes to a content type called UD Address, which has one field: field_ud_address. This content type and field will be created when the module is installed and removed when the module is uninstalled. The demo module itself depends on the following modules: address and migrate.

Note: Configuration placed in a module’s config/install directory will be copied to Drupal’s active configuration. And if those files have a dependencies/enforced/module key, the configuration will be removed when the listed modules are uninstalled. That is how the content type and fields are automatically created and deleted.

The recommended way to install the Address module is using composer: composer require drupal/address. This will grab the Drupal module and the commerceguys/addressing library that it depends on. If your Drupal site is not composer-based, an alternative is to use the Ludwig module. Read this article if you want to learn more about this option. In the example, it is assumed that the module and its dependency were obtained via composer. Also, keep an eye on the Composer Support in Core Initiative as they make progress.

Source and destination sections

The example will migrate three addresses from the following countries: Nicaragua, Germany, and the United States of America (USA). This makes it possible to show how different countries expect different address data. As usual, for any migration you need to understand the source. The following code snippet shows how the source and destination sections are configured:

source:
  plugin: embedded_data
  data_rows:
    - unique_id: 1
      first_name: 'Michele'
      last_name: 'Metts'
      company: 'Agaric LLC'
      city: 'Boston'
      state: 'MA'
      zip: '02111'
      country: 'US'
    - unique_id: 2
      first_name: 'Stefan'
      last_name: 'Freudenberg'
      company: 'Agaric GmbH'
      city: 'Hamburg'
      state: ''
      zip: '21073'
      country: 'DE'
    - unique_id: 3
      first_name: 'Benjamin'
      last_name: 'Melançon'
      company: 'Agaric SA'
      city: 'Managua'
      state: 'Managua'
      zip: ''
      country: 'NI'
  ids:
    unique_id:
      type: integer
destination:
  plugin: 'entity:node'
  default_bundle: ud_address

Note that not every address component is set for all addresses. For example, the Nicaraguan address does not contain a ZIP code, and the German address does not contain a state. Also, the Nicaraguan state is fully spelled out: Managua. By contrast, the USA state is a two-letter abbreviation: MA for Massachusetts. One more thing that might not be apparent is that the USA ZIP code belongs to the state of Massachusetts. All of this is important because the module validates addresses. The destination is the custom ud_address content type created by the module.

Available subfields

The Address field has 13 subfields available. They can be found in the schema() method of the AddressItem class. Fields are not required to have a one-to-one mapping between their schema and the form widgets used for entering content. This is particularly true for addresses, because input elements, labels, and validations change dynamically based on the selected country. The following is a reference list of all subfields for addresses:

- langcode for language code.

- country_code for country.

- administrative_area for administrative area (e.g., state or province).

- locality for locality (e.g., city).

- dependent_locality for dependent locality (e.g., neighbourhood).

- postal_code for postal or ZIP code.

- sorting_code for sorting code.

- address_line1 for address line 1.

- address_line2 for address line 2.

- organization for company.

- given_name for first name.

- additional_name for middle name.

- family_name for last name.
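Because getting subfield names right is part of the tricky work, a small pre-flight check can catch typos in a mapping before a migration runs. This is a minimal PHP sketch that assumes the process section has already been loaded into a plain PHP array keyed by destination (for example field_ud_address/given_name). The helper unknown_address_subfields() is hypothetical and is not part of the Address or Migrate modules.

```php
<?php
// The 13 Address subfields listed above.
const ADDRESS_SUBFIELDS = [
    'langcode', 'country_code', 'administrative_area', 'locality',
    'dependent_locality', 'postal_code', 'sorting_code', 'address_line1',
    'address_line2', 'organization', 'given_name', 'additional_name',
    'family_name',
];

/**
 * Returns the destinations in $process that target a subfield of $field
 * which does not exist (for example, a misspelled subfield name).
 * Hypothetical helper for illustration only.
 */
function unknown_address_subfields(array $process, string $field): array {
    $unknown = [];
    foreach (array_keys($process) as $destination) {
        $parts = explode('/', (string) $destination, 2);
        if (count($parts) < 2 || $parts[0] !== $field) {
            continue; // Not a subfield of this address field.
        }
        if (!in_array($parts[1], ADDRESS_SUBFIELDS, true)) {
            $unknown[] = $destination;
        }
    }
    return $unknown;
}

// Example: "postcode" is not a valid subfield; "postal_code" is.
$process = [
    'title' => 'first_name',
    'field_ud_address/given_name' => 'first_name',
    'field_ud_address/postcode' => 'zip',
];
print_r(unknown_address_subfields($process, 'field_ud_address'));
```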

Properly describing an address is not trivial. For example, there are discussions to add a third address line component. Check this issue if you need this functionality or would like to participate in the discussion.

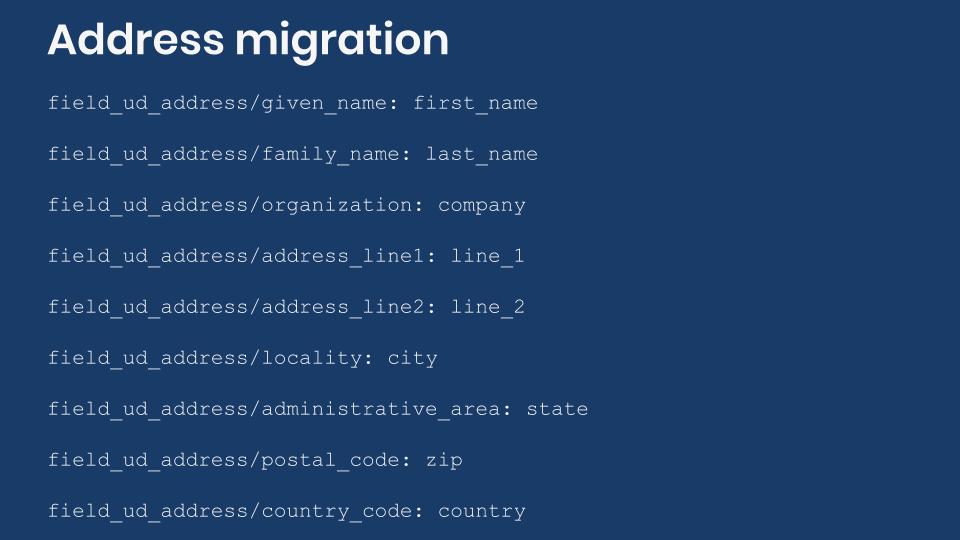

Address subfield mappings

In the example, only 9 out of the 13 subfields will be mapped. The following code snippet shows how to do the processing of the address field:

field_ud_address/given_name: first_name
field_ud_address/family_name: last_name
field_ud_address/organization: company
field_ud_address/address_line1:
  plugin: default_value
  default_value: 'It is a secret ;)'
field_ud_address/address_line2:
  plugin: default_value
  default_value: 'Do not tell anyone :)'
field_ud_address/locality: city
field_ud_address/administrative_area: state
field_ud_address/postal_code: zip
field_ud_address/country_code: country

The mapping is relatively simple: you specify a value for each subfield. The tricky part is knowing the name of the subfield and the value to store in it. The format for an address component can change among countries. The easiest way to see what components are expected for each country is to create a node for a content type that has an address field. With this example, you can go to /node/add/ud_address and try it yourself. For simplicity's sake, let’s consider only 3 countries:

- For USA, city, state, and ZIP code are all required. For state, you must choose from a predefined list.

- For Germany, the company is moved above first and last name. The ZIP code label changes to Postal code and it is required. The city is also required. It is not possible to set a state.

- For Nicaragua, the Postal code is optional. The State label changes to Department. It is required and offers a predefined list to choose from. The city is also required.
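The per-country rules above can be written down as data and checked before migrating. This PHP sketch encodes only the three countries just described, copied by hand from what the node form shows. It is an illustrative assumption, not data read from commerceguys/addressing, and check_address_components() is a hypothetical helper.

```php
<?php
// Rules for the three countries described above (hand-copied, illustrative).
// "unused" lists subfields the form does not offer for that country.
const COUNTRY_RULES = [
    'US' => ['required' => ['locality', 'administrative_area', 'postal_code'], 'unused' => []],
    'DE' => ['required' => ['locality', 'postal_code'], 'unused' => ['administrative_area']],
    'NI' => ['required' => ['locality', 'administrative_area'], 'unused' => []],
];

/**
 * Returns human-readable problems for one address (subfield => value).
 * An empty array means the address passed these simplified checks.
 */
function check_address_components(array $address): array {
    $country = $address['country_code'] ?? '';
    if (!array_key_exists($country, COUNTRY_RULES)) {
        return ["no rules for country '$country'"];
    }
    $rules = COUNTRY_RULES[$country];
    $problems = [];
    foreach ($rules['required'] as $subfield) {
        if (($address[$subfield] ?? '') === '') {
            $problems[] = "$subfield is required for $country";
        }
    }
    foreach ($rules['unused'] as $subfield) {
        if (($address[$subfield] ?? '') !== '') {
            $problems[] = "$subfield is not used for $country";
        }
    }
    return $problems;
}

// The three sample rows from the source section, mapped to subfield names.
$samples = [
    ['country_code' => 'US', 'locality' => 'Boston', 'administrative_area' => 'MA', 'postal_code' => '02111'],
    ['country_code' => 'DE', 'locality' => 'Hamburg', 'administrative_area' => '', 'postal_code' => '21073'],
    ['country_code' => 'NI', 'locality' => 'Managua', 'administrative_area' => 'Managua', 'postal_code' => ''],
];
foreach ($samples as $sample) {
    $problems = check_address_components($sample);
    echo $sample['country_code'], ': ', $problems ? implode('; ', $problems) : 'ok', PHP_EOL;
}
```

Keeping the rules as data makes it easy to add countries as you discover their expectations, but the authoritative validation still happens in the Address module.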

Pay very close attention. The available subfields will depend on the country. Also, the form labels change per country or language settings. They do not necessarily match the subfield names. Moreover, the values that you see on the screen might not match what is stored in the database. For example, a Nicaraguan address will store the full department name like Managua. On the other hand, a USA address will only store a two-letter code for the state like MA for Massachusetts.

Something else that is not apparent even from the user interface is data validation. For example, let’s say you have a USA address and select Massachusetts as the state. Entering the ZIP code 55111 will produce the following error: Zip code field is not in the right format. At first glance, the format is correct: a five-digit code. The real problem is that the Address module checks whether the ZIP code is valid for the selected state. 55111 is a ZIP code for the state of Minnesota, not Massachusetts, so the validation fails. Unfortunately, the error message does not say that. Nine-digit ZIP codes are accepted as long as they belong to the selected state.
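The two-step behavior described above (format first, then "does this ZIP belong to the state?") can be modeled in a few lines. This PHP sketch is a simplification: the prefix table is an assumption for illustration that covers only Massachusetts and Minnesota, and is_valid_us_zip() is hypothetical. The Address module's real check is performed by commerceguys/addressing with its own data.

```php
<?php
// Illustrative prefix patterns for two states only (assumption).
const US_STATE_ZIP_PREFIXES = [
    'MA' => '/^0[12]/', // Most Massachusetts ZIP codes start with 01 or 02.
    'MN' => '/^5[56]/', // Minnesota ZIP codes start with 55 or 56.
];

function is_valid_us_zip(string $zip, string $state): bool {
    // Step 1: five digits, optionally followed by a hyphen and four digits.
    if (preg_match('/^\d{5}(?:-\d{4})?$/', $zip) !== 1) {
        return false;
    }
    // Step 2: the ZIP must belong to the selected state.
    if (!array_key_exists($state, US_STATE_ZIP_PREFIXES)) {
        return true; // This sketch has no prefix data for other states.
    }
    return preg_match(US_STATE_ZIP_PREFIXES[$state], $zip) === 1;
}

var_dump(is_valid_us_zip('55111', 'MA')); // Well-formed, but wrong state.
var_dump(is_valid_us_zip('55111', 'MN'));
```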

Note: If you are upgrading from Drupal 7, the D8 Address module offers a process plugin to upgrade from the D7 Address Field module.

Finding expected values

Values for the same subfield can vary per country. How can you find out which value to use? There are a few ways, but they all require varying levels of technical knowledge or access to resources:

- You can inspect the source code of the address field widget. When the country and state components are rendered as select input fields (dropdowns), you can look at the value attribute of the option that you want to select. This will contain the two-letter code for countries, the two-letter abbreviations for USA states, and the fully spelled-out string for Nicaraguan departments.

- You can use the Devel module. Create a node containing an address, then use the devel tab of the node to inspect how the values are stored. It is not recommended to have the devel module on a production site. In fact, do not deploy the code even if the module is not enabled. This approach should only be used in a local development environment. Make sure neither the module nor its configuration is committed to the repository or deployed.

- You can inspect the database. If you are migrating nodes, look for the records in a table named node__field_[field_machine_name]. First create some example nodes via the user interface, then query the table. You will see how Drupal stores the values in the database.

If you know a better way, please share it in the comments.

The commerceguys addressing library

With version 8 came many changes in the way Drupal is developed. There is now an intentional effort to integrate with the greater PHP ecosystem. This involves using existing libraries and frameworks, like Symfony, but also making code written for Drupal available as external libraries that other projects can use. commerceguys/addressing is one example of a library that was made available this way, and the Address module depends on it.

Explaining how the library works or where it gets its data is beyond the scope of this article. Refer to the library documentation for more details on the topic. We are only going to point out some things that are relevant for the migration. For example, the ZIP code validation happens in the validatePostalCode() method of the AddressFormatConstraintValidator class. There is no need to know this for a migration project, but the key thing to remember is that a migration can be affected by third-party libraries outside of Drupal core or contributed modules. Another example is the value for the state subfield: the Address module expects a subdivision as listed in one of the files in the resources/subdivision directory.

Does the validation really affect the migration? We have already mentioned that the Migrate API bypasses Form API validations, and that is true for address fields as well. You can migrate a USA address with state Florida and ZIP code 55111. Both values are invalid: you need to use the two-letter state code FL and a ZIP code that belongs to that state. Nevertheless, the migration will not fail. In fact, if you visit the migrated node, you will see that Drupal happily shows the address with the data that you entered. The problem arises when you need to use the address. If you try to edit the node, you will see that the state is not preselected. And if you try to save the node after selecting Florida, you will get the validation error for the ZIP code.

These validation issues can be hard to track because the migration throws no error. The recommendation is to migrate a sample combination of countries and address components, then manually check that editing a node shows the migrated data for all the subfields. Also check that the address passes Form API validation upon saving. This manual testing can save you a lot of time and money down the road. After all, if you have an ecommerce site, you do not want to ship your products to wrong or invalid addresses. ;-)
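One way to avoid the Florida/FL problem is to normalize state values before they reach the migration, turning a spelled-out name into the code the Address module expects. This is a minimal PHP sketch. normalize_subdivision() is hypothetical. The small $us and $ni arrays are hand-written stand-ins for the library's resources/subdivision data, and the real file format is not reproduced here. Following the article, Nicaraguan departments are keyed by their full name. In a real project the same logic could run in a preprocessing step or a custom process plugin.

```php
<?php
/**
 * Returns the subdivision code for $value, or null if it is unknown.
 * $subdivisions maps code => human-readable name (assumed shape).
 */
function normalize_subdivision(string $value, array $subdivisions): ?string {
    if ($value === '') {
        return ''; // No state at all, e.g. the German sample row.
    }
    if (array_key_exists($value, $subdivisions)) {
        return $value; // Already the code the Address module expects.
    }
    foreach ($subdivisions as $code => $name) {
        if (strcasecmp($name, $value) === 0) {
            return (string) $code; // Spelled-out name found: use its code.
        }
    }
    return null; // Unknown value: flag the row instead of migrating bad data.
}

// Tiny hand-written excerpts (assumption, not the library's files).
$us = ['FL' => 'Florida', 'MA' => 'Massachusetts', 'MN' => 'Minnesota'];
$ni = ['Granada' => 'Granada', 'Managua' => 'Managua'];

var_dump(normalize_subdivision('Florida', $us));
```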

Technical note: The commerceguys/addressing library follows ISO standards, in particular ISO 3166 for country and state codes. It also uses CLDR and Google's address data. The dataset is stored as part of the library’s code in JSON format.

Migrating countries and zone fields

The Address module offers two more field types: Country and Zone. Both have a single subfield, value, which is selected by default. For a country field, you store the two-letter country code. For a zone field, you store a serialized version of a Zone object.

What did you learn in today’s blog post? Have you migrated addresses before? Did you know the full list of subcomponents available? Did you know that data expectations change per country? Please share your answers in the comments. Also, I would be grateful if you shared this blog post with others.

Next: Introduction to paragraphs migrations in Drupal

This blog post series, cross-posted at UnderstandDrupal.com as well as here on Agaric.coop, is made possible thanks to these generous sponsors: Drupalize.me by Osio Labs has online tutorials about migrations, among other topics, and Agaric provides migration trainings, among other services. Contact Understand Drupal if your organization would like to support this documentation project, whether it is the migration series or other topics.


I had a great time at BioRAFT Drupal Nights on January 16th, 2014. Chris Wells (cwells) was originally scheduled to speak, but unfortunately he was down with the flu. Michelle Lauer (Miche) put an awesome presentation together on really short notice. With help from Diliny Corlesquet (Dcor), there was plenty to absorb intellectually along with the delicious Middle Eastern food. I love spontaneity, so it was fun to hop over to BioRAFT in Cambridge, MA, to be a member of a panel of Senior Drupal Developers.

I joined Seth Cohn, Patrick Corbett, Michelle Lauer and Erik Peterson to present some viable solutions to performance issues in Drupal.

Several slides covered the topic, Drupal High Performance, and a lively discussion ensued. The panel members delved into some of the top issues with Drupal performance under several different conditions. The discussion was based on the book High Performance Drupal (O'Reilly) by Jeff Sheltren, Narayan Newton, and Nathaniel Catchpole: http://shop.oreilly.com/product/0636920012269.do

The room at BioRAFT was comfortably full, with just the right number of people for an informal Q and A and to directly address some concerns that developers were actually working on. Lurking in the back, I spotted some of the top developers in the Drupal community (scor, mlncn, kay_v, just to name a few) who were not on the panel, but they had a lot of experience to speak from during the Q and A.

The video posted at the bottom is packed with great information and techniques. Miche even shared some code snippets that will get you going if you seek custom performance enhancements.

One of the discussions was around tools that can be used to gauge performance or tweak it.

- Drupal's internal syslog

- watchdog - dblog

- Acquia has some tools

- Google Analytics

I also found an online test site: http://drupalsitereview.com

We also talked a lot about caching and the several options that exist to deal with cache on a Drupal site.

There are options to cache content for anonymous users and there are solutions and modules for more robust sites with high traffic.

PHP 5.5 ships with an integrated opcode cache (OPcache), a performance extension that keeps compiled PHP scripts in memory. Some sites have shown a 3x performance boost by using opcode caching. Opcode caching has no side effects beyond extra memory usage and should always be used in production environments. Below are some of the other options we discussed, all available as modules on Drupal.org:

- Boost: a good solution for sites that have a lot of content for anonymous users. https://drupal.org/project/boost

- Varnish: a good choice for sites with content for members. https://drupal.org/project/varnish

- Others:

  - Memcache

  - Redis

  - File Cache

The panel agreed that there are many things to consider when seeking to improve a site's performance:

- Hosting

- Custom Code

- Cache

- Memory Limits

- Traffic

- Module Compatibility

- CSS and JS Aggregation

A few more are discussed in the video below...

Sending out Be Wells to cwells! We look forward to a future presentation, and we are also pleased that on such short notice it did not seem too hard to gather a panel of several senior Drupal developers to discuss High Performance Drupal. See the whole discussion on YouTube.

According to the documentation:

- @variable: When the placeholder replacement value is:

  - A string, the replaced value in the returned string will be sanitized using \Drupal\Component\Utility\Html::escape().

  - A MarkupInterface object, the replaced value in the returned string will not be sanitized.

  - A MarkupInterface object cast to a string, the replaced value in the returned string will be forcibly sanitized using \Drupal\Component\Utility\Html::escape().

Using @ as the first character of the placeholder escapes the value if it is a string, but a MarkupInterface object is not sanitized, so we just need to pass an object of that type.

Drupal 8 has a class that implements MarkupInterface called Markup, so the Drupal 8 version of the code above looks like this:

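// At the top of the file:
use Drupal\Core\Link;
use Drupal\Core\Render\Markup;
use Drupal\Core\Url;

public function content() {
  $links[] = Link::fromTextAndUrl('Google', Url::fromUri('http://www.google.com'))->toString();
  $links[] = Link::fromTextAndUrl('Yahoo', Url::fromUri('http://www.yahoo.com'))->toString();
  // Markup::create() is static. Wrapping the joined links in a Markup
  // object keeps the @ placeholder from escaping them.
  $types = Markup::create(implode(', ', $links));
  return ['#markup' => t('Links: @links', ['@links' => $types])];
}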

And that's it, our string will display the links.
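To make the three documented rules concrete, here is a self-contained PHP model of how @ and % placeholders treat plain strings versus markup objects. It does not use Drupal: SafeMarkupInterface, SafeMarkup, and format_placeholders() are hypothetical stand-ins for MarkupInterface, Markup, and FormattableMarkup::placeholderFormat(), and htmlspecialchars() stands in for Html::escape(). It requires PHP 8.

```php
<?php
// Stand-in for MarkupInterface: marks a string as already safe HTML.
interface SafeMarkupInterface {
    public function __toString(): string;
}

// Stand-in for Markup, created through a static factory.
final class SafeMarkup implements SafeMarkupInterface {
    private function __construct(private string $html) {}

    public static function create(string $html): self {
        return new self($html);
    }

    public function __toString(): string {
        return $this->html;
    }
}

// Simplified model of the @ and % placeholder rules.
function format_placeholders(string $template, array $args): string {
    $replacements = [];
    foreach ($args as $key => $value) {
        // Markup objects pass through; plain strings (including markup that
        // was cast to a string beforehand) are escaped.
        $safe = $value instanceof SafeMarkupInterface
            ? (string) $value
            : htmlspecialchars((string) $value, ENT_QUOTES, 'UTF-8');
        $replacements[$key] = match ($key[0]) {
            '@' => $safe,
            '%' => '<em class="placeholder">' . $safe . '</em>',
            default => throw new InvalidArgumentException("Unsupported placeholder $key"),
        };
    }
    return strtr($template, $replacements);
}

$links = '<a href="http://www.google.com">Google</a>, <a href="http://www.yahoo.com">Yahoo</a>';
echo format_placeholders('Links: @links', ['@links' => SafeMarkup::create($links)]), PHP_EOL;
echo format_placeholders('Links: @links', ['@links' => $links]), PHP_EOL;
```

The first call keeps the anchors intact, while the second escapes them. This is why the Drupal 8 version wraps the joined links in a markup object instead of passing a plain string.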

If you want to see a real scenario where this is helpful, check this Comment Notify code for Drupal 7 and this patch for the D8 version.


Benjamin Melançon of Agaric helped with a patch for the Drupal 7 version of the Insert module.

Here at Agaric we work a lot with install profiles and, more often than not, we have to provide default content. This is mostly taxonomy terms, menu links, and sometimes even nodes with fields. Recently, I have started to use Migrate to load that data from JSON files.

Migrate is usually associated with importing content from a legacy website into Drupal, either from a database or files. Loading initial data is just a special case of a migration. Because it handles many kinds of data sources with a minimum of configuration effort, Migrate is well suited for the task.

Here is an example from our project Find It Cambridge. It is a list of terms I would like to add to a vocabulary, stored in a JSON file.

[
  {
    "term_name": "Braille",
    "weight": 0
  },
  {
    "term_name": "Sign language",
    "weight": 1
  },
  {
    "term_name": "Translation services provided",
    "weight": 2
  },
  {
    "term_name": "Wheelchair accessible",
    "weight": 3
  }
]

If you do not need a particular order for the terms in the vocabularies, you can skip the weight definition. In the next code snippet we specify a default value of 0 for the weight. In such a case, Drupal will list the terms alphabetically.
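To see what the default weight means for the data, here is a small standalone PHP sketch. It decodes a term list in the format above and fills in weight 0 where the key is missing, mirroring the ->defaultValue(0) mapping in the Migration class. It then orders terms by weight and then by name, which is how terms with equal weight end up listed alphabetically. load_terms() is hypothetical. Migrate and Drupal do their own processing and ordering.

```php
<?php
/**
 * Decodes a JSON term list, defaults missing weights to 0, and sorts by
 * weight, then name. Illustration only; not part of the Migrate module.
 */
function load_terms(string $json): array {
    $terms = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    foreach ($terms as &$term) {
        $term['weight'] = $term['weight'] ?? 0;
    }
    unset($term); // Break the reference left over from the foreach.
    usort($terms, fn(array $a, array $b): int =>
        [$a['weight'], $a['term_name']] <=> [$b['weight'], $b['term_name']]);
    return $terms;
}

$terms = load_terms('[{"term_name": "Wheelchair accessible"}, {"term_name": "Braille", "weight": 0}, {"term_name": "Sign language", "weight": 1}]');
echo implode(', ', array_column($terms, 'term_name')), PHP_EOL;
```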

Migrate does almost all the work for us: we just need to create a Migration class and configure it in the constructor. For a single JSON file, the appropriate choice for the source is MigrateSourceJSON. The destination is an instance of MigrateDestinationTerm. Migrate requires each source record to have a primary key, which is declared via MigrateSQLMap. In this case, term_name is defined as the primary key:

class TaxonomyTermJSONMigration extends Migration {
  public function __construct($arguments) {
    parent::__construct($arguments);
    $this->map = new MigrateSQLMap(
      $this->machineName,
      array(
        'term_name' => array(
          'type' => 'varchar',
          'length' => 255,
          'description' => 'The term name.',
          'not null' => TRUE,
        ),
      ),
      MigrateDestinationTerm::getKeySchema()
    );
    $this->source = new MigrateSourceJSON($arguments['path'], 'term_name');
    $this->destination = new MigrateDestinationTerm($arguments['vocabulary']);
    $this->addFieldMapping('name', 'term_name');
    $this->addFieldMapping('weight', 'weight')->defaultValue(0);
  }
}

This migration class expects to find the vocabulary machine name and the location of the JSON file in the $arguments parameter of the constructor. Those parameters are passed to Migration::registerMigration. Registration and processing can be handled during installation of the profile. Because there are several vocabularies to populate, I have defined a function:

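function findit_vocabulary_load_terms($machine_name, $path) {
  // Register a migration named after the vocabulary, passing the arguments
  // that TaxonomyTermJSONMigration reads in its constructor.
  Migration::registerMigration('TaxonomyTermJSONMigration', $machine_name, array(
    'vocabulary' => $machine_name,
    'path' => $path,
  ));
  // Run the import immediately.
  $migration = Migration::getInstance($machine_name);
  $migration->processImport();
}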

This function is called in our profile's implementation of hook_install with the path and vocabulary machine name for each vocabulary. The JSON files are stored in the profile's data directory, for example profiles/findit/data/accessibility_options.json relative to the Drupal installation directory. The following snippet is an extract from our install profile that demonstrates creating the vocabulary and using the above function to add the terms.

function findit_install() {
  …
  $vocabularies = array(
    …
    'accessibility_options' => st('Accessibility'),
    …
  );
  …
  foreach ($vocabularies as $machine_name => $name) {
    findit_create_vocabulary($name, $machine_name);
    // Terms for each vocabulary live in data/<machine_name>.json next to this file.
    findit_vocabulary_load_terms($machine_name, dirname(__FILE__) . '/data/' . $machine_name . '.json');
  }
  …
}

Executing drush site-install findit will set up content types, vocabularies, and create the taxonomy terms.

In the past I have used Drupal's API to create taxonomy terms, menu links, and other content, which also works well and does not add the mental overhead of another tool. But the Migrate approach has one key benefit in my opinion: it provides a well defined way of separating data from the means to import it and enables the developer to easily handle more complex tasks like nodes with fields. Compare the above approach of importing taxonomy terms to the following equivalent code:

$vocabulary = taxonomy_vocabulary_machine_name_load('accessibility_options');
$terms = array(
  array('vocabulary_machine_name' => 'accessibility_options', 'name' => 'Braille', 'weight' => 0),
  array('vocabulary_machine_name' => 'accessibility_options', 'name' => 'Sign language', 'weight' => 1),
  array('vocabulary_machine_name' => 'accessibility_options', 'name' => 'Translation services provided', 'weight' => 2),
  array('vocabulary_machine_name' => 'accessibility_options', 'name' => 'Wheelchair accessible', 'weight' => 3),
);
foreach ($terms as $term_data) {
  $term = (object) $term_data;
  // taxonomy_term_save() needs the vocabulary ID, not only its machine name.
  $term->vid = $vocabulary->vid;
  taxonomy_term_save($term);
}

Even though one can write the code in a style that takes care of separating code and data, it gets more complicated with less intuitive APIs. My preference is to have content in a separate file and rely on a well tested tool for importing it. Using Migrate and JSON files is a convenient and powerful solution to this end. What is your approach to providing default content?

Agaric hosts a weekly online meeting known as "Show and Tell". Participants share tips and tricks we have learned and ask other developers questions about tasks or projects we are working on. Each week we ask people to send us a little information about what they would like to present. This is not a prerequisite, just a suggestion. Having advance notice of presentations lets us spread the word to others who may be interested, but you can simply show up, and chances are there will be time to present for 5-10 minutes. You can sign up for the "Show and Tell" mailing list to be notified of upcoming events.

We recently opened the "Show and Tell" chat to connect with other cooperatives doing web development work. Agaric was contacted by members of Fiqus.coop in Argentina, who had already started an initiative to meet developers from other cooperatives and, in this way, share values and goals. Nobody had sent notice of a presentation, so we changed the topic of the chat to make it more of a meet-and-greet, so we could get to know each other better with the goal of sharing our work on projects. The value of the meeting became clear immediately as we got into conversation with some members of Fiqus.

Next, we invited more developers to join the discussion, and the doors opened to share more deeply and connect. This week our meeting was off the charts! Nicolás Dimarco walked us through a short slide presentation that revealed a federated process and workflow for sharing development among members of multiple cooperatives. The plan is so simple that everyone understood it immediately. The conversation that followed was compelling, and the questions showed where we need to educate others about cooperative principles vs. corporate tactics. We need more discussion about trust and friendship. So many developers in corporate jobs have asked me how a web development cooperative works and how a project works without a manager. First, I like to explain that projects do have managers, but they manage the work, not the people. Taking the time to get to know each other's programming skills and passions is an essential part of being able to work together in a Federation. Fiqus.coop has made it simple for everyone to see the path to sharing work on projects!

Here is the link to the video recording of the chat where Nicolás Dimarco of Fiqus.coop presents the formula for federated work between cooperatives. Here is a link to the notes from the March 20, 2019 meeting and some past Show and Tell meetings.

More information about Show and Tell.

Some Drupal shops already work together on projects, and we can help that collaboration grow by sharing our experiences. We would love to hear how you work and what processes you have found that make sharing work on projects a success!

I’ve been working on open source projects for a long time and contributing to Drupal for 6 years now.

And I want to share my experience and the things that helped me contribute to Drupal.

Where you can help:

I think one of the first problems I faced when I started contributing was picking an issue from the Drupal issue queue and starting to work on it. When I started, all the issues seemed very hard or complex (and some are). Fortunately, there is a list of issues for people who want to start contributing to Drupal: these issues have the Novice tag. The idea is to let someone experience what working on an issue is like.

What you need to know

Some of the things to learn while working on novice issues are:

- Use git

- Learn to create a patch using git

- When an issue is old and its patch no longer applies, it is necessary to reroll the patch. This is very common in the issue queue, and it just means updating the patch so it can be applied to the project again.

- Every time a new patch is uploaded, you should provide an interdiff. An interdiff is a file showing what changed from the previous patch to the new one. This is very important because it helps reviewers see what has changed, especially for a big patch.

- Use the Drupal Coding Standards. The easiest way to follow them is to use PHPCS and, later, to integrate it with your IDE.

- The changes must pass the "Core Gates" which essentially are checklists of things that every contribution must have in order to keep the quality of the code up to standards.

Don’t work on novice issues forever

While working on novice issues is a good way to start, it is necessary to jump to issues not marked as novice as soon as we feel comfortable with the things listed above. The non-novice issues are where we can really learn how Drupal works.

A few ways to start working on non-novice issues are:

- Reviewing patches from other users. You learn how a change is made and start seeing how core works. Reading other people's code is a great way to improve your abilities as a developer, and at the same time it helps keep issues moving so they can be fixed.

- Writing tests. Every patch with a fix must have a test to avoid regressions. While writing tests sounds hard, Drupal 8 helps us a lot with this, and there is a whole page written about the topic. The Examples module provides not just examples of how to do things with Drupal but also of how to test them, so keep that module at hand: it is a great resource. Drupal 8 already has thousands of tests, so there is almost certainly at least one that is very similar to what you need to do.

- Picking an issue related to the component you want to know more about. Drupal 8 has a lot of components. If you want to know how a specific part works, just pick one of its bugs and work on it. Don’t worry if the bug looks easy to fix from the issue description but turns out not to be in the code; that is normal. Before you start fixing the bug, you need to understand what the problem is, and part of that is learning how that specific part of the component works. So by the time you can provide a patch for the issue, you will know how that component works. This is (for me) the best way to learn: while you help make Drupal more stable, you gain a very deep understanding of how Drupal works.

- Being your own critic while you are working on client work. If something doesn’t seem right, don’t hesitate to look for an existing issue about that problem before you write a workaround. Maybe someone has already written a patch and it just needs a reroll, a review, or a test. Sometimes you can help while working on a project. At Agaric we recently found a bug while working on a project, and it was a 6-year-old issue that just needed a review and an extra test. Now it is fixed, for us and for everyone.

Final comments

When you feel frustrated while working on an issue, remember the Thomas Edison quote: "Genius is one percent inspiration, ninety-nine percent perspiration." If we keep trying, keep working, keep asking questions, and keep trying new things, and just don’t give up, eventually we will make it happen. When someone starts contributing, it is normal to feel like they are not good enough. Just keep trying!

Remember, for most people contributing is unpaid labor. Don’t feel disappointed if you spent a good amount of time on an issue and no one reviews it. Some issues have been around for years without being committed, but even so, the next developer with the same problem will find your patch and use it. So even if your code never becomes part of a module or core, it still helps.

Going to conferences and meeting Drupalistas is a good way to keep you motivated and to learn new things. It is fun to meet in person the Drupal.org users who helped you in the issue queue.

Another thing that might help keep you motivated is seeing your name on DrupalCores.com. There you can see a list of users and their mentions: with every new mention or contribution, your nick will climb a few places in the ranking.

